Amazon has reached a milestone by deploying its one-millionth robot across its global fulfillment and sortation centers, solidifying its position as the world’s largest operator of industrial mobile robots. The milestone coincides with the launch of DeepFleet, a suite of foundation models designed to improve coordination across large fleets of mobile robots. Trained on billions of hours of real-world operational data, these models are intended to optimize robot movements, reduce congestion, and improve fleet travel efficiency by up to 10%.

The Rise of Foundation Models in Robotics

Foundation models, popularized in language and vision AI, rely on massive datasets to learn general patterns that can be adapted to various tasks. Amazon is applying this approach to robotics, where coordinating thousands of robots in dynamic warehouse environments demands predictive intelligence beyond traditional simulations.

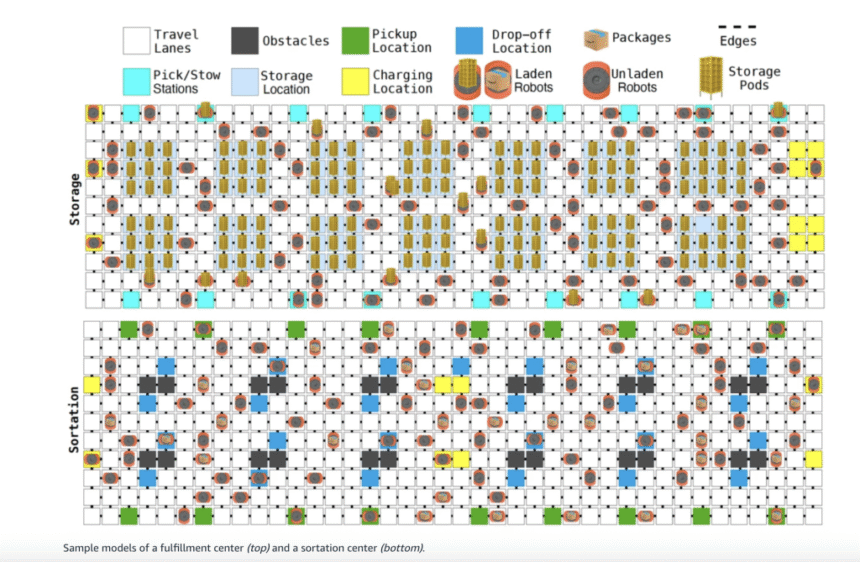

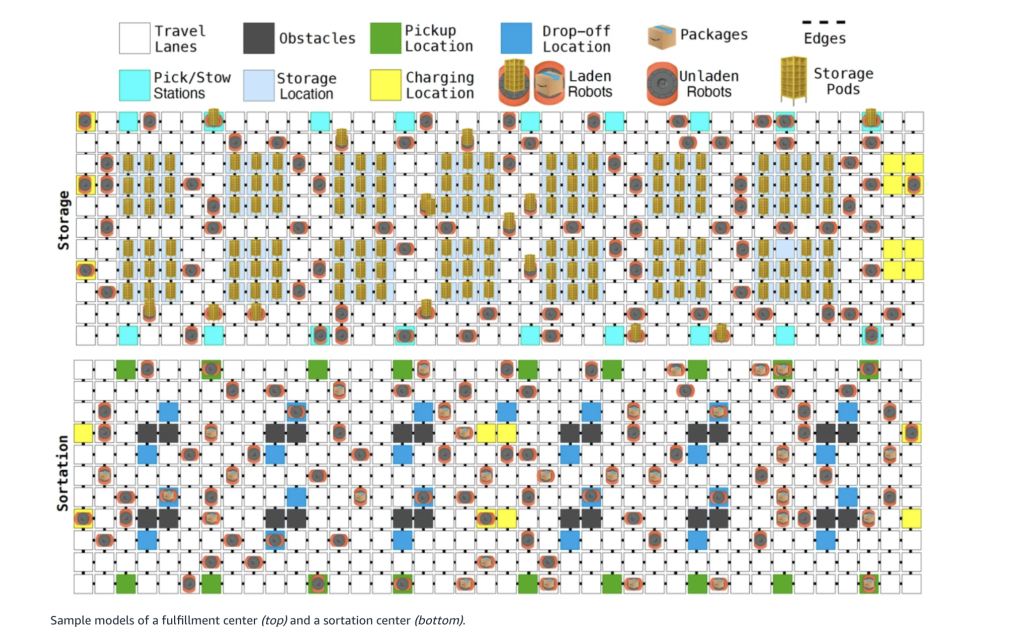

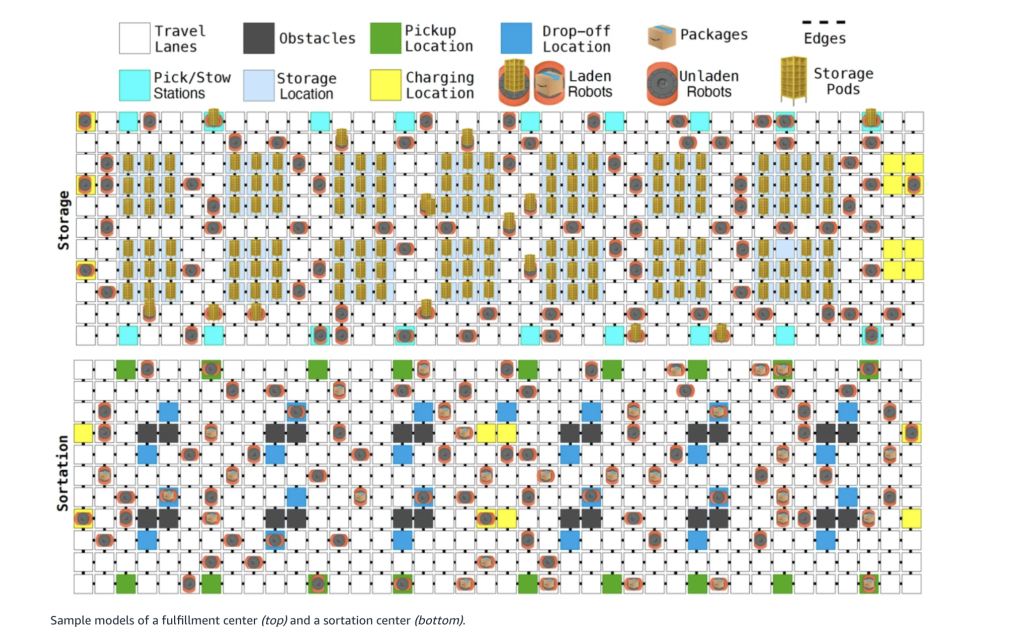

In fulfillment centers, robots transport inventory shelves to human workers, while in sortation facilities, they handle packages for delivery. With fleets numbering in the hundreds of thousands, challenges like traffic jams and deadlocks can slow operations. DeepFleet addresses these by forecasting robot trajectories and interactions, enabling proactive planning.

The models draw on diverse data across warehouse layouts, robot generations, and operational cycles, capturing emergent behaviors such as congestion waves. This breadth of data, spanning millions of robot-hours, allows DeepFleet to generalize across scenarios, much as large language models adapt to new queries.

Exploring the DeepFleet Architectures

DeepFleet comprises four model architectures, each with a different inductive bias for modeling multi-robot dynamics:

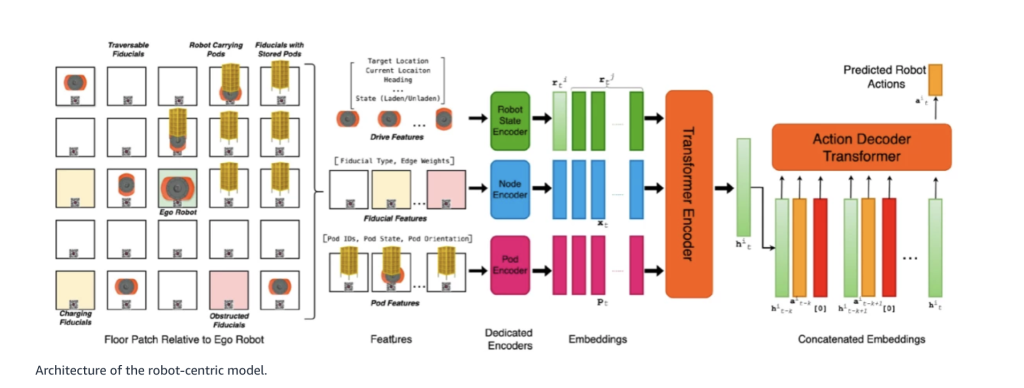

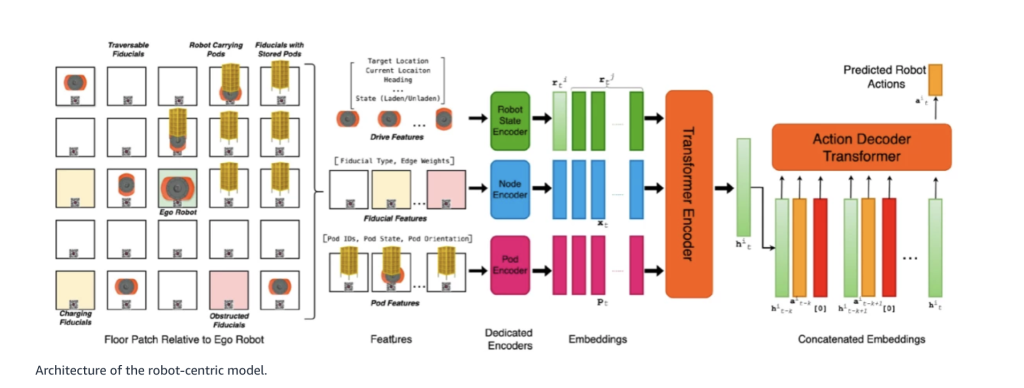

- Robot-Centric (RC) Model: This autoregressive transformer focuses on individual robots, using local neighborhood data (e.g., nearby robots, objects, and markers) to predict next actions. It processes asynchronous updates and pairs with a deterministic environment simulator for state evolution. With 97 million parameters, it excelled in evaluations, achieving the lowest errors in position and state predictions.

- Robot-Floor (RF) Model: Employing cross-attention, this model integrates robot states with global floor features like vertices and edges. It decodes actions synchronously, balancing local interactions and warehouse-wide context. At 840 million parameters, it performed strongly on timing predictions.

- Image-Floor (IF) Model: Treating the warehouse as a multi-channel image, this model uses convolutional encoding for spatial features and transformers for temporal sequences. In evaluations, however, it underperformed the other architectures, likely because robot-to-robot interactions are hard to capture at the pixel level across a warehouse-scale floor.

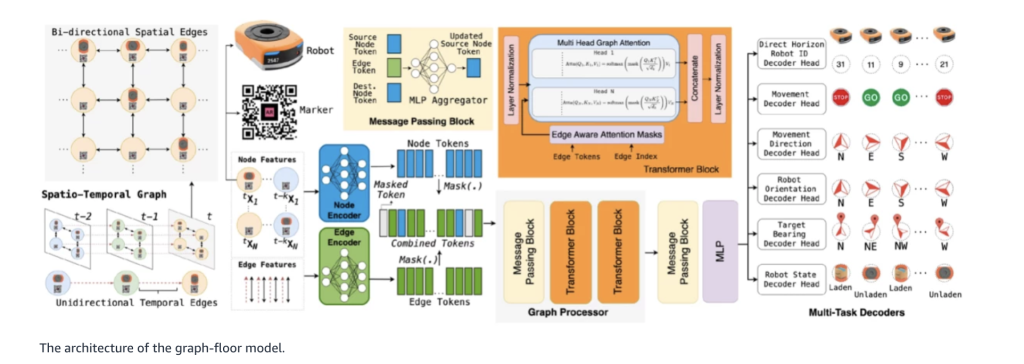

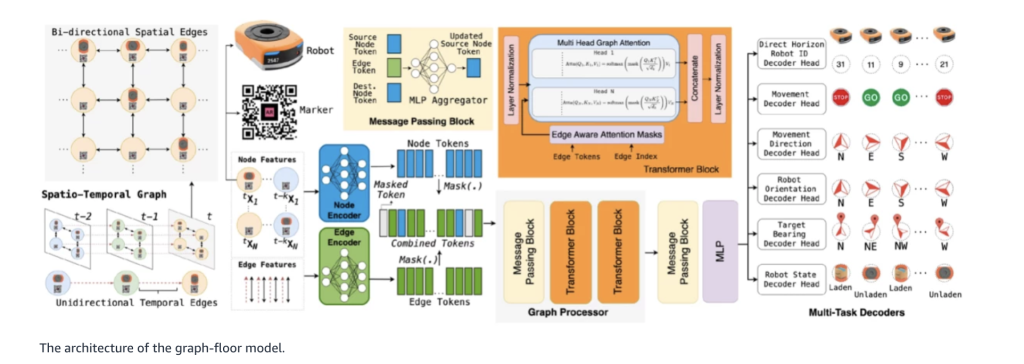

- Graph-Floor (GF) Model: Combining graph neural networks with transformers, this represents the floor as a spatiotemporal graph. It handles global relationships efficiently, predicting actions and states with just 13 million parameters, making it computationally lean yet competitive.
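To make the graph view of the floor more concrete, here is a minimal sketch of the graph half of a GF-style model, assuming the floor is a simple 4-connected grid of vertices with a single "robot present" feature per vertex. The layout, the feature, and the helper names `grid_adjacency` and `message_pass` are hypothetical; the paper's actual floor graph, features, and transformer stage are not reproduced, and the learned weight matrix is replaced by an identity for readability.

```python
import numpy as np


def grid_adjacency(rows: int, cols: int) -> np.ndarray:
    """Adjacency matrix of a 4-connected rows x cols grid (hypothetical floor layout)."""
    n = rows * cols
    adj = np.zeros((n, n), dtype=float)
    for r in range(rows):
        for c in range(cols):
            i = r * cols + c
            # Connect each vertex to its right and lower neighbors; edges are undirected.
            if c + 1 < cols:
                j = i + 1
                adj[i, j] = adj[j, i] = 1.0
            if r + 1 < rows:
                j = i + cols
                adj[i, j] = adj[j, i] = 1.0
    return adj


def message_pass(adj: np.ndarray, feats: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """One GNN-style layer: mean over self and neighbors, then a linear map and ReLU."""
    a_hat = adj + np.eye(adj.shape[0])           # add self-loops
    deg = a_hat.sum(axis=1, keepdims=True)       # contributors per vertex
    aggregated = (a_hat @ feats) / deg           # mean-aggregate neighbor features
    return np.maximum(aggregated @ weight, 0.0)  # linear map + ReLU


# 3x3 floor; feature 0 marks whether a robot occupies the vertex (illustrative only).
adj = grid_adjacency(3, 3)
feats = np.zeros((9, 1))
feats[4, 0] = 1.0  # one robot at the center vertex
out = message_pass(adj, feats, np.eye(1))
print(out.ravel().round(3))
```

After one layer, the center vertex's value becomes 0.2 (its own occupancy averaged over itself and four neighbors) and each of its four neighbors picks up 0.25, while the corners stay at 0; stacking layers spreads information further across the floor, which is the kind of global context a graph encoder provides cheaply.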

These designs differ in their temporal (synchronous vs. event-based) and spatial (local vs. global) treatment, which lets Amazon test which combination best suits large-scale forecasting.
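The temporal distinction can be illustrated with a toy example. In the event-based rollout below, assumed here to resemble the RC model's asynchronous setup, each robot is advanced only when its own next event comes due, using a priority queue; a deterministic update function stands in for the environment simulator. In the synchronous rollout, every robot is decoded and stepped once per global tick. `toy_policy`, `simulate_step`, and the per-robot `period` are hypothetical placeholders, not DeepFleet's learned model or simulator.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Robot:
    rid: int
    x: int
    period: float  # hypothetical: time between this robot's decision events


def toy_policy(robot: Robot) -> int:
    """Hypothetical stand-in for a learned model: always move one cell right."""
    return 1


def simulate_step(robot: Robot, action: int) -> None:
    """Deterministic state update, playing the role of the environment simulator."""
    robot.x += action


def event_based_rollout(robots, horizon: float):
    """Advance each robot only at its own event times (asynchronous updates)."""
    queue = [(r.period, r.rid) for r in robots]
    heapq.heapify(queue)
    by_id = {r.rid: r for r in robots}
    log = []
    while queue and queue[0][0] <= horizon:
        t, rid = heapq.heappop(queue)
        robot = by_id[rid]
        simulate_step(robot, toy_policy(robot))
        log.append((t, rid, robot.x))
        heapq.heappush(queue, (t + robot.period, rid))  # schedule next event
    return log


def synchronous_rollout(robots, steps: int):
    """Advance every robot once per global tick, regardless of its own timing."""
    for _ in range(steps):
        actions = [toy_policy(r) for r in robots]  # decode all actions together
        for r, a in zip(robots, actions):
            simulate_step(r, a)
    return [r.x for r in robots]


fast, slow = Robot(0, 0, 1.0), Robot(1, 0, 2.5)
events = event_based_rollout([fast, slow], horizon=5.0)
sync = synchronous_rollout([Robot(0, 0, 1.0), Robot(1, 0, 2.5)], steps=5)
print(fast.x, slow.x, sync)
```

Over the same horizon, the event-based loop moves the fast robot five cells and the slow one two, whereas the synchronous loop moves both robots five cells because it ignores each robot's own timing.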

Performance Insights and Scaling Potential

Evaluations on held-out warehouse data used metrics such as dynamic time warping (DTW) for trajectory accuracy and congestion delay error (CDE) for operational realism. The RC model led overall, with a position DTW of 8.68 and a CDE of 0.11%, while GF delivered strong results at much lower complexity.
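DTW compares a predicted trajectory with the ground truth while allowing the two sequences to be locally stretched or compressed in time, so a robot that pauses briefly is not penalized as if it had taken a different path. A minimal textbook implementation is sketched below, assuming 2-D positions and Euclidean point cost; the paper's exact distance and normalization may differ, so values from this sketch are not comparable to the reported scores.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def dtw_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Classic O(len(a) * len(b)) dynamic time warping with Euclidean point cost."""
    n, m = len(a), len(b)
    # cost[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(
                cost[i - 1][j],      # a advances, b repeats its point
                cost[i][j - 1],      # b advances, a repeats its point
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


truth = [(0, 0), (1, 0), (2, 0), (3, 0)]
# Same path, but the predicted robot pauses for one step at (1, 0).
paused = [(0, 0), (1, 0), (1, 0), (2, 0), (3, 0)]
# Same timing, but the whole path is offset by one cell.
shifted = [(0, 1), (1, 1), (2, 1), (3, 1)]
print(dtw_distance(truth, paused), dtw_distance(truth, shifted))
```

The pause costs nothing because warping absorbs it, while the one-cell offset costs 1 at every aligned step; this is why DTW suits trajectory comparison better than a strict step-by-step error.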

Scaling experiments confirmed that larger models and datasets reduce prediction loss, following the patterns seen in other foundation models. For GF, extrapolations suggest that a 1-billion-parameter version trained on 6.6 million episodes would make the most efficient use of the corresponding compute budget.
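Scaling analyses of this kind typically fit a power law such as L(N) ≈ a·N^(−b) to losses measured at several model sizes N and then extrapolate. The sketch below shows only that fitting step. Its data points are synthetic, generated from an assumed law with a = 5.0 and b = 0.1; they are not DeepFleet measurements, and the paper's full procedure (which also accounts for dataset size and compute) is not reproduced.

```python
import numpy as np


def fit_power_law(sizes, losses):
    """Fit L = a * N**(-b) by linear least squares in log-log space."""
    log_n = np.log(np.asarray(sizes, dtype=float))
    log_l = np.log(np.asarray(losses, dtype=float))
    # log L = log a - b * log N, so the slope is -b and the intercept is log a.
    slope, intercept = np.polyfit(log_n, log_l, deg=1)
    return float(np.exp(intercept)), float(-slope)


# Synthetic losses from an assumed law (a=5.0, b=0.1); NOT measured DeepFleet numbers.
sizes = [1e6, 3e6, 1e7, 3e7, 1e8]
losses = [5.0 * n ** -0.1 for n in sizes]

a, b = fit_power_law(sizes, losses)
predicted = a * 1e9 ** (-b)  # extrapolate beyond the fitted range, to 1B parameters
print(round(a, 3), round(b, 3), predicted)
```

On real measurements the points scatter around the line, so the fitted exponent and any extrapolation carry uncertainty that grows the further the target lies beyond the measured range.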

This scalability is key, as Amazon’s vast robot fleet provides an unmatched data advantage. Early applications include congestion forecasting and adaptive routing, with potential for task assignment and deadlock prevention.
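As an illustration of how trajectory forecasts could feed congestion forecasting, the sketch below takes hypothetical predicted grid positions for each robot over a short horizon and flags the (timestep, cell) pairs that more than `capacity` robots are forecast to occupy. The input format, the `capacity` threshold, and the `forecast_hotspots` helper are assumptions for illustration; the source does not describe DeepFleet's downstream interfaces.

```python
from collections import Counter
from typing import Dict, List, Tuple

Cell = Tuple[int, int]


def forecast_hotspots(
    predicted: Dict[str, List[Cell]], capacity: int = 1
) -> Dict[int, List[Cell]]:
    """Return, per timestep, the cells whose forecast occupancy exceeds `capacity`."""
    horizon = max(len(path) for path in predicted.values())
    hotspots: Dict[int, List[Cell]] = {}
    for t in range(horizon):
        # Count robots forecast in each cell at step t (robots whose path has ended are skipped).
        occupancy = Counter(path[t] for path in predicted.values() if t < len(path))
        crowded = sorted(cell for cell, k in occupancy.items() if k > capacity)
        if crowded:
            hotspots[t] = crowded
    return hotspots


# Hypothetical forecasts: r1 and r2 are predicted to converge on cell (2, 2) at step 2.
predicted = {
    "r1": [(0, 2), (1, 2), (2, 2), (3, 2)],
    "r2": [(2, 0), (2, 1), (2, 2), (2, 3)],
    "r3": [(5, 5), (5, 6), (5, 7)],
}
print(forecast_hotspots(predicted))
```

A planner could use such a report to reroute or delay one of the two robots before step 2. This simple check catches only robots sharing a cell, not two robots swapping positions along an edge, which a real deadlock-prevention system would also need to handle.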

Real-World Impact on Operations

DeepFleet is already enhancing Amazon’s network, which spans over 300 facilities worldwide, including a recent deployment in Japan. By improving robot travel efficiency, it enables faster package processing and lower costs, directly benefiting customers.

Beyond efficiency, Amazon emphasizes workforce development, having upskilled over 700,000 employees since 2019 in robotics and AI-related roles. This integration creates safer jobs by offloading heavy tasks to machines.

Looking Ahead

As Amazon continues refining DeepFleet, focusing on the RC, RF, and GF variants, the technology could redefine multi-robot systems in logistics. By using AI to anticipate fleet behavior, it moves beyond reactive control and paves the way for more autonomous, scalable operations. This work underscores how foundation models are extending from digital domains into physical automation, with the potential to transform industries that rely on coordinated robotics.

Check out the Paper and Technical Blog.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.