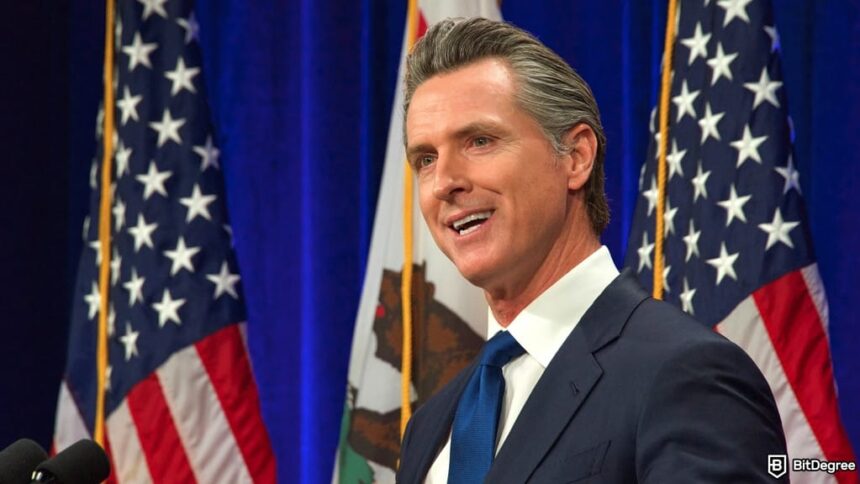

California Governor Gavin Newsom has signed the country’s first law regulating artificial intelligence (AI) companion chatbots.

The law, known as SB 243, sets new rules for companies that develop and operate these AI systems.

It places clear responsibilities on chatbot operators such as Meta, OpenAI, Replika, and Character AI, which can now be held accountable if their platforms fail to meet the new safety requirements.

The rules are intended to ensure that AI companions are used in ways that do not cause harm.

Starting in January 2026, companies must add age-verification features and display warnings about the potential risks of social media and companion chatbot use. They must also make clear to users that they are interacting with software, not a human being.

The law also requires companies to act in serious situations: if a user appears to be considering suicide or self-harm, the chatbot must respond with crisis support information.

Companies must report how often they issue these crisis notifications and share their safety protocols with the state’s public health agency.

To protect minors, platforms must prevent underage users from seeing sexually explicit images generated by the chatbot and must remind them to take breaks.

The law also raises penalties for creating and selling illegal deepfake content, with fines of up to $250,000 per offense.

Recently, the US Senate passed the GAIN Act (Guaranteeing Access and Innovation for National Artificial Intelligence Act of 2026). What does the proposal cover? Read the full story.