In this tutorial, we’ll explore how to implement Chain-of-Thought (CoT) reasoning using the Mirascope library and a Llama 3.3 model served by Groq. Rather than having the model jump straight to an answer, CoT reasoning encourages it to break the problem down into logical steps, much like a human would. This approach can improve accuracy and transparency, and it helps the model tackle complex, multi-step tasks more reliably. We’ll guide you through setting up the schema, defining step-by-step reasoning calls, generating final answers, and visualizing the thinking process in a structured way.

We’ll be asking the LLM a relative velocity question – “If a train leaves City A at 9:00 AM traveling at 60 km/h, and another train leaves City B (which is 300 km away from City A) at 10:00 AM traveling at 90 km/h toward City A, at what time will the trains meet?”
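As a reference point for checking the model's output, the expected answer can be worked out by hand. The sketch below assumes the first train is heading toward City B, which the question implies but does not state. The variable names are illustrative only.

```python
from datetime import datetime, timedelta

# Assumption: train A travels toward City B, so the trains approach each other.
distance_km = 300
speed_a_kmh = 60  # leaves City A at 9:00 AM
speed_b_kmh = 90  # leaves City B at 10:00 AM

# The date is arbitrary; only the clock time matters.
depart_a = datetime(2024, 1, 1, 9, 0)
depart_b = datetime(2024, 1, 1, 10, 0)

# Distance train A covers alone before train B departs.
head_start_hours = (depart_b - depart_a).total_seconds() / 3600
gap_km = distance_km - speed_a_kmh * head_start_hours  # 300 - 60 = 240 km

# After 10:00 AM the gap closes at the sum of both speeds.
hours_to_meet = gap_km / (speed_a_kmh + speed_b_kmh)  # 240 / 150 = 1.6 h

meeting_time = depart_b + timedelta(hours=hours_to_meet)
print(meeting_time.strftime("%H:%M"))  # 11:36
```

Under that assumption the trains meet at 11:36 AM, 1 hour 36 minutes after the second train departs.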

Installing the dependencies

!pip install "mirascope[groq]"

The datetime module used later for timing is part of the Python standard library, so it needs no separate install.

Groq API Key

For this tutorial, we require a Groq API key to make LLM calls. You can get one at https://console.groq.com/keys
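One way to make the key available is to place it in an environment variable before any calls are made. The sketch below assumes the Groq client reads the key from `GROQ_API_KEY`, which is its default behavior. The helper `configure_groq_key` is a hypothetical name introduced here for illustration.

```python
import os
from getpass import getpass
from typing import Optional


def configure_groq_key(key: Optional[str] = None) -> None:
    """Store the Groq API key in the GROQ_API_KEY environment variable.

    Hypothetical helper: prompts for the key (without echoing it)
    when none is passed in.
    """
    if key is None:
        key = getpass("Enter Groq API key: ")
    os.environ["GROQ_API_KEY"] = key
```

In a notebook, calling `configure_groq_key()` with no argument prompts for the key, which keeps it out of the saved cell contents.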

Importing the libraries & defining a Pydantic schema

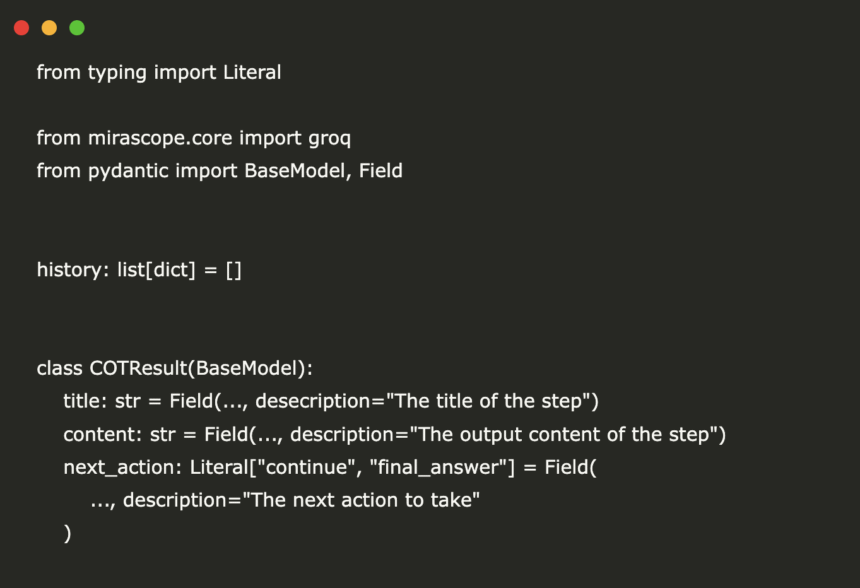

This section imports the required libraries and defines a COTResult Pydantic model. The schema structures each reasoning step with a title, content, and a next_action flag to indicate whether the model should continue reasoning or return the final answer.
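To illustrate what the schema enforces, here is a minimal sketch that validates two hand-written JSON payloads against a model with the same three fields. `StepSketch` and both payloads are stand-ins invented for this example, not real model output.

```python
import json
from typing import Literal

from pydantic import BaseModel, Field, ValidationError


class StepSketch(BaseModel):
    """Illustrative stand-in with the same three fields as COTResult."""

    title: str = Field(..., description="The title of the step")
    content: str = Field(..., description="The output content of the step")
    next_action: Literal["continue", "final_answer"] = Field(
        ..., description="The next action to take"
    )


# Hand-written payloads standing in for model output.
valid_payload = (
    '{"title": "Set up", "content": "List the knowns.", "next_action": "continue"}'
)
invalid_payload = (
    '{"title": "Set up", "content": "List the knowns.", "next_action": "stop"}'
)

step = StepSketch(**json.loads(valid_payload))
print(step.next_action)

try:
    StepSketch(**json.loads(invalid_payload))
    rejected = False
except ValidationError:
    rejected = True  # "stop" is not one of the two allowed literals
print(rejected)
```

Restricting `next_action` to two literal values means the reasoning loop only ever has to handle "continue" or "final_answer".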

from datetime import datetime
from typing import Literal

from mirascope.core import groq
from pydantic import BaseModel, Field

# Shared log of user questions and final answers
history: list[dict] = []


class COTResult(BaseModel):
    """One reasoning step returned by the model."""

    title: str = Field(..., description="The title of the step")
    content: str = Field(..., description="The output content of the step")
    next_action: Literal["continue", "final_answer"] = Field(
        ..., description="The next action to take"
    )

Defining Step-wise Reasoning and Final Answer Functions

These functions form the core of the Chain-of-Thought (CoT) reasoning workflow. The cot_step function allows the model to think iteratively by reviewing prior steps and deciding whether to continue or conclude. This enables deeper reasoning, especially for multi-step problems. The final_answer function consolidates all reasoning into a single, focused response, making the output clean and ready for end-user consumption. Together, they help the model approach complex tasks more logically and transparently.

@groq.call("llama-3.3-70b-versatile", json_mode=True, response_model=COTResult)
def cot_step(prompt: str, step_number: int, previous_steps: str) -> str:
    return f"""
    You are an expert AI assistant that explains your reasoning step by step.
    For this step, provide a title that describes what you're doing, along with the content.
    Decide if you need another step or if you're ready to give the final answer.

    Guidelines:
    - Use AT MOST 5 steps to derive the answer.
    - Be aware of your limitations as an LLM and what you can and cannot do.
    - In your reasoning, include exploration of alternative answers.
    - Consider you may be wrong, and if you are wrong in your reasoning, where it would be.
    - Fully test all other possibilities.
    - YOU ARE ALLOWED TO BE WRONG. When you say you are re-examining
        - Actually re-examine, and use another approach to do so.
        - Do not just say you are re-examining.

    IMPORTANT: Do not use code blocks or programming examples in your reasoning. Explain your process in plain language.

    This is step number {step_number}.

    Question: {prompt}

    Previous steps:
    {previous_steps}
    """


@groq.call("llama-3.3-70b-versatile")
def final_answer(prompt: str, reasoning: str) -> str:
    return f"""
    Based on the following chain of reasoning, provide a final answer to the question.
    Only provide the text response without any titles or preambles.
    Retain any formatting as instructed by the original prompt, such as exact formatting for free response or multiple choice.

    Question: {prompt}

    Reasoning:
    {reasoning}

    Final Answer:
    """

Generating and Displaying Chain-of-Thought Responses

This section defines two key functions to manage the full Chain-of-Thought reasoning loop:

- generate_cot_response handles the iterative reasoning process. It sends the user query to the model step-by-step, tracks each step’s content, title, and response time, and stops when the model signals it has reached the final answer or after a maximum of 5 steps. It then calls final_answer to produce a clear conclusion based on the accumulated reasoning.

- display_cot_response neatly prints the step-by-step breakdown along with the time taken for each step, followed by the final answer and the total processing time.

Together, these functions help visualize how the model reasons through a complex prompt and allow for better transparency and debugging of multi-step outputs.
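The stopping logic described above can be tried without any API calls. The sketch below swaps the model for stub step functions, so `FakeStep`, `run_loop`, and both stubs are hypothetical names introduced for illustration. Only the loop's two exit conditions are being demonstrated.

```python
from typing import Callable, NamedTuple

MAX_STEPS = 5  # same cap as in the description above


class FakeStep(NamedTuple):
    """Hypothetical stand-in for one structured reasoning step."""

    title: str
    content: str
    next_action: str  # "continue" or "final_answer"


def run_loop(
    step_fn: Callable[[str, int, str], FakeStep], query: str
) -> list[FakeStep]:
    """Call step_fn until it signals completion or the step cap is reached."""
    steps: list[FakeStep] = []
    previous_steps = ""
    for step_number in range(1, MAX_STEPS + 1):
        result = step_fn(query, step_number, previous_steps)
        steps.append(result)
        # Each new step sees the content of all earlier steps.
        previous_steps += f"\n{result.content}\n"
        if result.next_action == "final_answer":
            break
    return steps


def finishes_at_three(query: str, step_number: int, previous_steps: str) -> FakeStep:
    """Stub that declares itself done on the third step."""
    action = "final_answer" if step_number == 3 else "continue"
    return FakeStep(f"Step {step_number}", f"thought {step_number}", action)


def never_finishes(query: str, step_number: int, previous_steps: str) -> FakeStep:
    """Stub that always asks for another step."""
    return FakeStep(f"Step {step_number}", f"thought {step_number}", "continue")


print(len(run_loop(finishes_at_three, "demo")))  # 3
print(len(run_loop(never_finishes, "demo")))  # 5
```

The second call shows why the cap matters: a model that never returns "final_answer" would otherwise loop indefinitely.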

def generate_cot_response(
    user_query: str,
) -> tuple[list[tuple[str, str, float]], float]:
    steps: list[tuple[str, str, float]] = []
    total_thinking_time: float = 0.0
    step_count: int = 1
    reasoning: str = ""
    previous_steps: str = ""

    while True:
        start_time: datetime = datetime.now()
        cot_result = cot_step(user_query, step_count, previous_steps)
        end_time: datetime = datetime.now()
        thinking_time: float = (end_time - start_time).total_seconds()

        steps.append(
            (
                f"Step {step_count}: {cot_result.title}",
                cot_result.content,
                thinking_time,
            )
        )
        total_thinking_time += thinking_time

        reasoning += f"\n{cot_result.content}\n"
        previous_steps += f"\n{cot_result.content}\n"

        # Stop when the model is ready to answer or the step cap is reached
        if cot_result.next_action == "final_answer" or step_count >= 5:
            break

        step_count += 1

    # Generate final answer
    start_time = datetime.now()
    final_result: str = final_answer(user_query, reasoning).content
    end_time = datetime.now()
    thinking_time = (end_time - start_time).total_seconds()
    total_thinking_time += thinking_time

    steps.append(("Final Answer", final_result, thinking_time))

    return steps, total_thinking_time


def display_cot_response(
    steps: list[tuple[str, str, float]], total_thinking_time: float
) -> None:
    for title, content, thinking_time in steps:
        print(f"{title}:")
        print(content.strip())
        print(f"**Thinking time: {thinking_time:.2f} seconds**\n")

    print(f"**Total thinking time: {total_thinking_time:.2f} seconds**")

Running the Chain-of-Thought Workflow

The run function initiates the full Chain-of-Thought (CoT) reasoning process by sending a multi-step math word problem to the model. It begins by printing the user’s question, then uses generate_cot_response to compute a step-by-step reasoning trace. These steps, along with the total processing time, are displayed using display_cot_response.

Finally, the function logs both the question and the model’s final answer into a shared history list, preserving the full interaction for future reference or auditing. This function ties together all earlier components into a complete, user-facing reasoning flow.
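The history logging relies on the final answer always being the last tuple in the step list. The sketch below shows that indexing on a hand-written trace. The step texts and timings are made-up placeholders, not real model output.

```python
# Hypothetical trace in the (title, content, seconds) shape described above.
steps = [
    ("Step 1: Head start", "Placeholder reasoning for step one.", 0.8),
    ("Step 2: Closing speed", "Placeholder reasoning for step two.", 0.7),
    ("Final Answer", "Placeholder final answer.", 0.5),
]
question = "At what time will the trains meet?"

history: list[dict] = []
history.append({"role": "user", "content": question})
# steps[-1] is the final-answer tuple; index 1 is its text.
history.append({"role": "assistant", "content": steps[-1][1]})

print(history[-1]["content"])  # Placeholder final answer.
```

Only the final answer is stored, so the intermediate reasoning steps are not carried into the history.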

def run() -> None:
    question: str = "If a train leaves City A at 9:00 AM traveling at 60 km/h, and another train leaves City B (which is 300 km away from City A) at 10:00 AM traveling at 90 km/h toward City A, at what time will the trains meet?"
    print("(User):", question)

    # Generate COT response
    steps, total_thinking_time = generate_cot_response(question)
    display_cot_response(steps, total_thinking_time)

    # Add the interaction to the history
    history.append({"role": "user", "content": question})
    history.append(
        {"role": "assistant", "content": steps[-1][1]}
    )  # Add only the final answer to the history


# Run the function
run()
