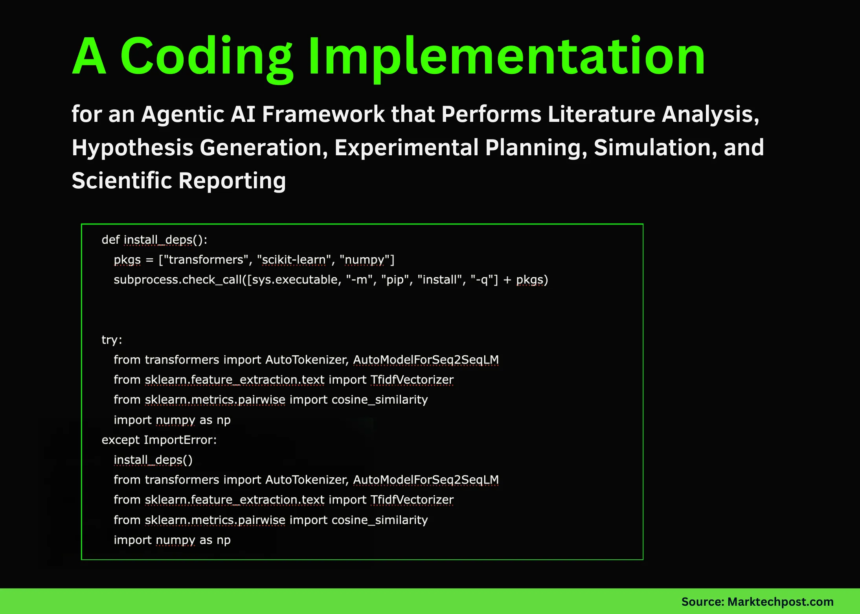

In this tutorial, we build a complete scientific discovery agent step by step and see how its components work together to form a coherent research workflow. We begin by loading a small literature corpus and constructing retrieval and LLM modules, then assemble agents that search papers, generate hypotheses, design experiments, and produce structured reports. Through the snippets below, we see how an agentic pipeline emerges naturally, letting us take a scientific question from initial curiosity to a full analysis within a single, integrated system. Check out the FULL CODES here.

```python
import sys, subprocess

def install_deps():
    # torch is needed for return_tensors="pt" and the PyTorch model class.
    pkgs = ["transformers", "torch", "scikit-learn", "numpy"]
    subprocess.check_call([sys.executable, "-m", "pip", "install", "-q"] + pkgs)

try:
    import torch  # noqa: F401  (only checks that the PyTorch backend is installed)
    from transformers import AutoTokenizer, AutoModelForSeq2SeqLM
    from sklearn.feature_extraction.text import TfidfVectorizer
    from sklearn.metrics.pairwise import cosine_similarity
    import numpy as np
except ImportError:
    install_deps()
    import torch  # noqa: F401
    from transformers import AutoTokenizer, AutoModelForSeq2SeqLM
    from sklearn.feature_extraction.text import TfidfVectorizer
    from sklearn.metrics.pairwise import cosine_similarity
    import numpy as np

from dataclasses import dataclass
from typing import List, Dict, Any

np.random.seed(42)  # seeds NumPy's global RNG, which the simulated experiment uses

# A tiny, hand-written literature corpus used for retrieval.
LITERATURE = [
    {"id": "P1", "title": "Self-Supervised Protein Language Models for Structure Prediction", "field": "computational biology",
     "abstract": "We explore transformer-based protein language models trained on millions of sequences. The models learn residue-level embeddings that improve secondary structure prediction and stability estimation."},
    {"id": "P2", "title": "CRISPR Off-Target Detection Using Deep Learning", "field": "genome editing",
     "abstract": "We propose a convolutional neural network architecture for predicting CRISPR-Cas9 off-target effects directly from genomic sequences, achieving state-of-the-art accuracy on GUIDE-seq datasets."},
    {"id": "P3", "title": "Foundation Models for Scientific Equation Discovery", "field": "scientific ML",
     "abstract": "Large language models are combined with symbolic regression to recover governing equations from noisy experimental observations in physics and fluid dynamics."},
    {"id": "P4", "title": "Active Learning for Materials Property Optimization", "field": "materials science",
     "abstract": "We integrate Bayesian optimization with graph neural networks to actively select candidate materials that maximize target properties while reducing experimental cost."},
    {"id": "P5", "title": "Graph-Based Retrieval for Cross-Domain Literature Review", "field": "NLP for science",
     "abstract": "We construct a heterogeneous citation and concept graph over multi-domain scientific papers and show that graph-aware retrieval improves cross-domain literature exploration."},
]

# Index the abstract + title of every paper with TF-IDF.
corpus_texts = [p["abstract"] + " " + p["title"] for p in LITERATURE]
vectorizer = TfidfVectorizer(stop_words="english")
corpus_matrix = vectorizer.fit_transform(corpus_texts)

# Small instruction-tuned seq2seq model used for all text generation.
MODEL_NAME = "google/flan-t5-small"
tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
model = AutoModelForSeq2SeqLM.from_pretrained(MODEL_NAME)

def generate_text(prompt: str, max_new_tokens: int = 256) -> str:
    # Truncate prompts that exceed the tokenizer's maximum input length.
    inputs = tokenizer(prompt, return_tensors="pt", truncation=True)
    # Beam search with 4 beams, stopping once all beams have finished.
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens, num_beams=4, early_stopping=True)
    return tokenizer.decode(outputs[0], skip_special_tokens=True)
```

This lays the foundation for our scientific agent: we load the libraries, prepare the literature corpus, and initialize the language model. We fit a TF-IDF vectorizer on each paper's abstract and title so that relevant papers can be retrieved later, and we wrap the model in a `generate_text` helper that every agent uses. Together, these form the computational backbone for everything that follows.
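To make the TF-IDF step less of a black box, here is a minimal, dependency-free sketch of the weighting that scikit-learn's `TfidfVectorizer` applies by default: raw term counts times a smoothed IDF, `idf = ln((1 + n) / (1 + df)) + 1`, followed by L2 normalization. The regex mirrors the vectorizer's default token pattern; the tiny `STOP_WORDS` set is an assumption for illustration and is far smaller than scikit-learn's English list.

```python
import math
import re
from collections import Counter
from typing import Dict, List

# Hypothetical, tiny stop-word list; scikit-learn's "english" list is much larger.
STOP_WORDS = {"the", "and", "of", "for", "to", "we", "from", "on", "in", "with"}

def tokenize(text: str) -> List[str]:
    # Lowercase, then keep runs of 2+ word characters (TfidfVectorizer's default pattern).
    return [t for t in re.findall(r"(?u)\b\w\w+\b", text.lower()) if t not in STOP_WORDS]

def tfidf(docs: List[str]) -> List[Dict[str, float]]:
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    # Document frequency: number of documents containing each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    # Smoothed IDF, matching smooth_idf=True.
    idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        vec = {t: c * idf[t] for t, c in counts.items()}
        # L2-normalize so a dot product between two vectors equals their cosine similarity.
        norm = math.sqrt(sum(v * v for v in vec.values())) or 1.0
        vectors.append({t: v / norm for t, v in vec.items()})
    return vectors

docs = [
    "protein language models",
    "CRISPR off-target prediction",
    "protein structure prediction",
]
vectors = tfidf(docs)
print(sorted(vectors[0].items()))
```

Because "protein" appears in two of the three documents, it receives a lower weight than "language", which appears in only one. In the pipeline itself, `TfidfVectorizer` does all of this and returns a sparse matrix.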

```python
@dataclass
class PaperHit:
    paper: Dict[str, Any]
    score: float

class LiteratureAgent:
    def __init__(self, vectorizer, corpus_matrix, papers: List[Dict[str, Any]]):
        self.vectorizer = vectorizer
        self.corpus_matrix = corpus_matrix
        self.papers = papers

    def search(self, query: str, k: int = 3) -> List[PaperHit]:
        # Project the query into the same TF-IDF space as the corpus.
        q_vec = self.vectorizer.transform([query])
        # Cosine similarity between the query and every paper.
        sims = cosine_similarity(q_vec, self.corpus_matrix)[0]
        # Indices of the k highest-scoring papers, best first.
        idxs = np.argsort(-sims)[:k]
        hits = [PaperHit(self.papers[i], float(sims[i])) for i in idxs]
        return hits
```

This is the literature-search component of our agent. We project each query into the same TF-IDF space as the corpus, score every paper by cosine similarity, and return the top `k` matches as `PaperHit` objects. This grounds the agent's later reasoning in the closest-matching prior work.
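Stripped of scikit-learn, `search` boils down to three steps: score every paper against the query with cosine similarity, sort by score, and keep the best `k`. A dependency-free sketch over sparse dictionary vectors; the vectors and weights below are made up for illustration, not taken from the real TF-IDF index.

```python
import math
from typing import Dict, List, Tuple

SparseVec = Dict[str, float]

def cosine(a: SparseVec, b: SparseVec) -> float:
    # Dot product over shared terms only; missing terms count as zero.
    dot = sum(w * b[t] for t, w in a.items() if t in b)
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def top_k(query: SparseVec, corpus: Dict[str, SparseVec], k: int = 3) -> List[Tuple[str, float]]:
    scored = [(pid, cosine(query, vec)) for pid, vec in corpus.items()]
    # Highest similarity first; ties are broken by paper ID to keep the order stable.
    scored.sort(key=lambda item: (-item[1], item[0]))
    return scored[:k]

# Hypothetical toy vectors.
corpus = {
    "P1": {"protein": 1.0, "language": 1.0},
    "P2": {"crispr": 1.0, "target": 1.0},
    "P3": {"equation": 1.0, "symbolic": 1.0},
}
query = {"protein": 2.0, "crispr": 1.0}
result = top_k(query, corpus, k=2)
print(result)
```

The query leans toward "protein", so P1 outranks P2, and P3, which shares no terms with the query, scores zero.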

```python
@dataclass
class ExperimentPlan:
    system: str
    hypothesis: str
    variables: Dict[str, Any]
    protocol: List[str]

@dataclass
class ExperimentResult:
    plan: ExperimentPlan
    metrics: Dict[str, float]

class ExperimentAgent:
    def design_experiment(self, question: str, hypothesis: str, hits: List[PaperHit]) -> ExperimentPlan:
        # Describe the system using the field of the best-matching paper.
        top_field = hits[0].paper["field"] if hits else "computational science"
        protocol = [
            f"Construct dataset combining ideas from: {', '.join(h.paper['id'] for h in hits)}.",
            "Split data into train/validation/test.",
            "Compare baseline model vs. augmented model implementing the hypothesis.",
            "Evaluate using appropriate metrics and perform ablation analysis.",
        ]
        # Variables are hard-coded for the CRISPR example question used below.
        variables = {
            "baseline_model": "sequence CNN",
            "augmented_model": "protein language model + CNN",
            "n_train_samples": 5000,
            "n_validation_samples": 1000,
            "metric": "AUROC",
        }
        system = f"{top_field} system related to: {question}"
        return ExperimentPlan(system=system, hypothesis=hypothesis, variables=variables, protocol=protocol)

    def run_experiment(self, plan: ExperimentPlan) -> ExperimentResult:
        # Synthetic metrics only: no model is trained here.
        # Baseline AUROC is drawn around 0.78; the gain around 0.05, made non-negative by abs().
        base = 0.78 + 0.02 * np.random.randn()
        gain = abs(0.05 + 0.01 * np.random.randn())
        metrics = {
            "baseline_AUROC": round(base, 3),
            "augmented_AUROC": round(base + gain, 3),
            "estimated_gain": round(gain, 3),
        }
        return ExperimentResult(plan=plan, metrics=metrics)
```

We design and simulate an experiment based on the retrieved literature and the generated hypothesis. The protocol references the retrieved paper IDs, the variables are fixed for the CRISPR example, and `run_experiment` draws synthetic AUROC values that mimic a baseline-versus-augmented comparison; no model is actually trained. This takes us from a hypothesis to an actionable experimental plan.
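`run_experiment` draws from NumPy's global random state, so its numbers depend on how many draws have already happened in the session. A minimal alternative sketch, assuming you want each simulated run to be reproducible on its own: pass a seed and use a local generator. The formulas mirror the original; the function name `simulate_metrics` is hypothetical, and Python's `random` module stands in for NumPy.

```python
import random
from typing import Dict

def simulate_metrics(seed: int) -> Dict[str, float]:
    # Local RNG: the result depends only on `seed`, not on any global random state.
    rng = random.Random(seed)
    base = 0.78 + 0.02 * rng.gauss(0.0, 1.0)
    # abs() keeps the simulated gain non-negative, as in the original run_experiment.
    gain = abs(0.05 + 0.01 * rng.gauss(0.0, 1.0))
    return {
        "baseline_AUROC": round(base, 3),
        "augmented_AUROC": round(base + gain, 3),
        "estimated_gain": round(gain, 3),
    }

run_a = simulate_metrics(seed=7)
run_b = simulate_metrics(seed=7)
print(run_a, run_a == run_b)
```

With NumPy, `np.random.default_rng(seed)` plays the same role as `random.Random(seed)`. Either way, the numbers remain synthetic and say nothing about whether the hypothesis actually holds.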

```python
class ReportAgent:
    def write_report(self, question: str, hits: List[PaperHit], plan: ExperimentPlan, result: ExperimentResult) -> str:
        # Render the retrieved papers and protocol steps as bullet lists.
        related_work = "\n".join(f"- {h.paper['title']} ({h.paper['field']})" for h in hits)
        protocol_str = "\n".join(f"- {step}" for step in plan.protocol)
        prompt = f"""
You are an AI research assistant writing a concise research-style report.

Research question:
{question}

Hypothesis:
{plan.hypothesis}

Relevant prior work:
{related_work}

Planned experiment:
System: {plan.system}
Variables: {plan.variables}
Protocol:
{protocol_str}

Simulated results:
{result.metrics}

Write a clear report with the following sections:
1. Background
2. Proposed Approach
3. Experimental Setup
4. Results and Discussion
5. Limitations and Future Work
"""
        return generate_text(prompt.strip(), max_new_tokens=320)
```

We generate a research-style report with the LLM. The prompt combines the hypothesis, related work, protocol, and simulated results, and asks for five clearly defined sections. This turns the pipeline's raw outputs into a readable scientific summary.
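A small model such as `flan-t5-small` may not reproduce all five requested headings, so it can help to check the generated text before treating it as a finished report. A minimal sketch; the helper `missing_sections` and its simple case-insensitive substring match are assumptions, not part of the original pipeline.

```python
from typing import List, Sequence

# The five headings requested in ReportAgent's prompt.
REPORT_SECTIONS = (
    "Background",
    "Proposed Approach",
    "Experimental Setup",
    "Results and Discussion",
    "Limitations and Future Work",
)

def missing_sections(report: str, sections: Sequence[str] = REPORT_SECTIONS) -> List[str]:
    # Case-insensitive substring check; a stricter version could match numbered headings.
    lowered = report.lower()
    return [s for s in sections if s.lower() not in lowered]

draft = "1. Background\n...\n2. Proposed Approach\n...\n4. Results and Discussion\n..."
print(missing_sections(draft))
```

If sections are missing, one option is to prompt for each heading separately with `generate_text` instead of requesting the whole report in a single call.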

```python
class ScientificAgent:
    def __init__(self):
        self.lit_agent = LiteratureAgent(vectorizer, corpus_matrix, LITERATURE)
        self.exp_agent = ExperimentAgent()
        self.report_agent = ReportAgent()

    def propose_hypothesis(self, question: str, hits: List[PaperHit]) -> str:
        # Concatenate the retrieved abstracts as context for the LLM.
        context = " ".join(h.paper["abstract"] for h in hits)
        prompt = f"""
You are an AI scientist. Given a research question and related abstracts,
propose a single, testable hypothesis in 2-3 sentences.

Research question:
{question}

Related abstracts:
{context}
"""
        return generate_text(prompt.strip(), max_new_tokens=96)

    def run_pipeline(self, question: str) -> str:
        hits = self.lit_agent.search(question, k=3)                            # 1. retrieve papers
        hypothesis = self.propose_hypothesis(question, hits)                   # 2. propose a hypothesis
        plan = self.exp_agent.design_experiment(question, hypothesis, hits)    # 3. design the experiment
        result = self.exp_agent.run_experiment(plan)                           # 4. simulate results
        report = self.report_agent.write_report(question, hits, plan, result)  # 5. write the report
        return report

if __name__ == "__main__":
    research_question = (
        "How can protein language model embeddings improve CRISPR off-target "
        "prediction compared to sequence-only CNN baselines?"
    )
    agent = ScientificAgent()
    final_report = agent.run_pipeline(research_question)
    print(final_report)
```

We orchestrate the entire pipeline: searching the literature, generating a hypothesis, designing the experiment, running the simulation, and writing the report. We then run the system on a concrete research question about CRISPR off-target prediction and observe the complete workflow end to end. This step brings all the modules together into a unified scientific agent.
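Because `run_pipeline` is a straight chain of calls, with each stage's output feeding the next, its control flow can be exercised without downloading a model. A minimal sketch of one possible refactor: the stages are passed in as callables, so hypothetical stubs can stand in for the literature, hypothesis, experiment, and report agents while a trace records the order in which they run. The names `Stages`, `run_stages`, and the `stub_*` functions are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Plan = Dict[str, Any]
Metrics = Dict[str, float]

@dataclass
class Stages:
    # Each field stands in for one agent used by ScientificAgent.
    search: Callable[[str], List[str]]
    hypothesize: Callable[[str, List[str]], str]
    design: Callable[[str, str, List[str]], Plan]
    run: Callable[[Plan], Metrics]
    report: Callable[[str, List[str], Plan, Metrics], str]

def run_stages(question: str, stages: Stages) -> str:
    # Same order as ScientificAgent.run_pipeline: search, hypothesis, design, run, report.
    hits = stages.search(question)
    hypothesis = stages.hypothesize(question, hits)
    plan = stages.design(question, hypothesis, hits)
    metrics = stages.run(plan)
    return stages.report(question, hits, plan, metrics)

# Hypothetical stubs: deterministic outputs, no model download, and a call trace.
trace: List[str] = []

def stub_search(q: str) -> List[str]:
    trace.append("search")
    return ["P2", "P1"]

def stub_hypothesize(q: str, hits: List[str]) -> str:
    trace.append("hypothesize")
    return "PLM embeddings improve off-target AUROC"

def stub_design(q: str, h: str, hits: List[str]) -> Plan:
    trace.append("design")
    return {"hypothesis": h, "papers": hits}

def stub_run(plan: Plan) -> Metrics:
    trace.append("run")
    return {"baseline_AUROC": 0.78, "augmented_AUROC": 0.83}

def stub_report(q: str, hits: List[str], plan: Plan, m: Metrics) -> str:
    trace.append("report")
    return f"{plan['hypothesis']} | papers={hits} | metrics={m}"

stages = Stages(stub_search, stub_hypothesize, stub_design, stub_run, stub_report)
report = run_stages("toy question", stages)
print(report)
print(trace)
```

In the real agent, the bound methods `lit_agent.search`, `propose_hypothesis`, and so on would fill these slots; the stubs only make the data flow easy to inspect and test.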

In conclusion, we have seen how a compact codebase can grow into a working AI co-researcher that searches, reasons, simulates, and summarizes. Each snippet contributes one stage of the pipeline, and the agentic components become more useful in combination than on their own. We are also well positioned to extend the agent with richer literature sources, more realistic models, and more sophisticated experimental logic, pushing our scientific exploration further with every iteration.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.