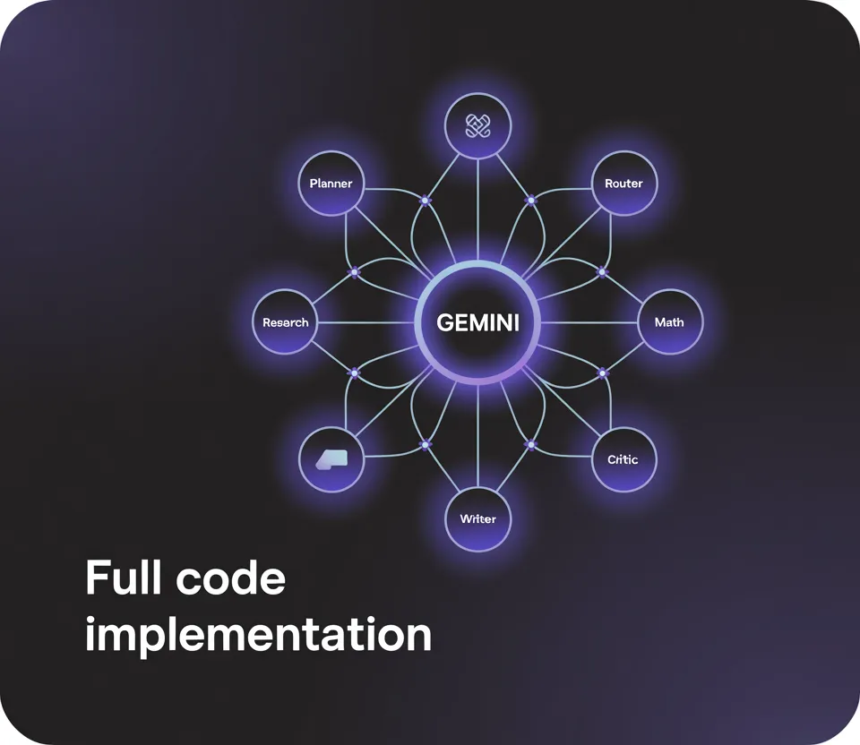

In this tutorial, we implement an advanced graph-based AI agent, GraphAgent, in plain Python on top of the Gemini 1.5 Flash model. We define a directed graph of nodes, each responsible for a specific function:

- a planner to break down the task
- a router to control flow
- research and math nodes to provide external evidence and computation
- a writer to synthesize the answer
- a critic to validate and refine the output

We integrate Gemini through a thin wrapper that sends structured JSON prompts. Local Python functions act as tools for safe math evaluation and document search. By executing this pipeline end-to-end, we demonstrate how reasoning, retrieval, and validation can be modularized within a single cohesive system. Check out the FULL CODES here.

```python
import os, json, time, ast, math, getpass
from dataclasses import dataclass, field
from typing import Dict, List, Callable, Any

import google.generativeai as genai

try:
    import networkx as nx
except ImportError:
    nx = None
```

We begin by importing core Python libraries for data handling, timing, and safe evaluation. We also import dataclasses and typing helpers to structure our state. Finally, we load the google.generativeai client to access Gemini and, optionally, NetworkX for graph visualization.
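NetworkX is imported above but is not used anywhere in the code shown here. The sketch below is one hedged illustration of the graph-analysis role it could play. It writes the agent's control flow as a directed edge list and checks that every node can reach the terminal node.

`EDGES` and `reachable` are hypothetical names, and the edges were transcribed by hand from the plan, route, research/math, write, critic flow. When NetworkX is installed, the sketch uses its `nx.DiGraph` and `nx.descendants`. Otherwise it falls back to a plain breadth-first search.

```python
from collections import deque

try:
    import networkx as nx
except ImportError:  # NetworkX stays optional, mirroring the tutorial's import
    nx = None

# Hypothetical edge list, transcribed by hand from the agent's control flow.
EDGES = [
    ("plan", "route"),
    ("route", "research"), ("research", "route"),
    ("route", "math"), ("math", "route"),
    ("route", "write"), ("write", "critic"), ("critic", "end"),
]

def reachable(edges, start):
    """Return every node reachable from `start`, including `start` itself."""
    if nx is not None:
        graph = nx.DiGraph(edges)
        return {start} | nx.descendants(graph, start)
    # Fallback: breadth-first search over a plain adjacency dict.
    adj = {}
    for u, v in edges:
        adj.setdefault(u, []).append(v)
    seen, queue = {start}, deque([start])
    while queue:
        for v in adj.get(queue.popleft(), []):
            if v not in seen:
                seen.add(v)
                queue.append(v)
    return seen

nodes = {n for edge in EDGES for n in edge}
# Nodes that cannot reach "end" could only stop by exhausting a step budget.
dead_ends = {n for n in nodes if "end" not in reachable(EDGES, n)}
print(sorted(reachable(EDGES, "plan")))
print(dead_ends)  # set()
```

A non-empty `dead_ends` set would flag a node from which the execution loop can only terminate by running out of steps.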

```python
def make_model(api_key: str, model_name: str = "gemini-1.5-flash"):
    genai.configure(api_key=api_key)
    return genai.GenerativeModel(model_name, system_instruction=(
        "You are GraphAgent, a principled planner-executor. "
        "Prefer structured, concise outputs; use provided tools when asked."
    ))

def call_llm(model, prompt: str, temperature=0.2) -> str:
    r = model.generate_content(prompt, generation_config={"temperature": temperature})
    try:
        return (r.text or "").strip()
    except ValueError:
        # .text raises when the reply has no text part (e.g. a blocked response);
        # every caller treats "" as "no usable output" and falls back.
        return ""
```

We define two helpers:

- `make_model` configures and returns a Gemini model with a custom system instruction.
- `call_llm` sends a prompt to that model at a controlled temperature and returns the stripped text.

The system instruction and the low default temperature nudge the agent toward structured, concise outputs.
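Several nodes below recover JSON from free-form model replies by slicing from the first opening bracket to the last closing one. A small helper could centralize that pattern and return a default instead of raising.

`extract_json` is a hypothetical name. It is not part of the tutorial's code or of the google.generativeai SDK, and the sample replies are made up for illustration.

```python
import json
from typing import Any

def extract_json(text: str, default: Any, opener: str = "{", closer: str = "}") -> Any:
    """Parse the outermost JSON object/array embedded in an LLM reply.

    Uses the same first-opener/last-closer slice as node_plan and node_research,
    but returns `default` instead of raising when nothing parseable is found.
    """
    start, end = text.find(opener), text.rfind(closer)
    if start == -1 or end < start:
        return default
    try:
        return json.loads(text[start:end + 1])
    except json.JSONDecodeError:
        return default

# Replies often surround the JSON with chatty prose; the slice drops it.
reply = 'Sure! Here is the plan:\n{"subtasks": ["Research"], "tools": {"search": true, "math": true}}\nDone.'
plan = extract_json(reply, default={"subtasks": []})
print(plan["tools"]["math"])  # True

queries = extract_json('Queries: ["solar capacity factor", "wind intermittency"]',
                       default=[], opener="[", closer="]")
print(queries)  # ['solar capacity factor', 'wind intermittency']
```

Passing the fallback in as `default` keeps each node's recovery behavior next to the call site. This matches how `node_plan` and `node_research` already supply their own fallbacks.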

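```python
def safe_eval_math(expr: str) -> str:
    node = ast.parse(expr, mode="eval")
    # Whitelist of node types allowed in a pure arithmetic expression.
    # ast.AST must not appear here: every node subclasses it, so listing it
    # would let any expression (names, calls, attributes) pass the check.
    allowed = (ast.Expression, ast.BinOp, ast.UnaryOp, ast.Constant,
               ast.Add, ast.Sub, ast.Mult, ast.Div, ast.Pow, ast.Mod,
               ast.USub, ast.UAdd, ast.FloorDiv)

    def check(n):
        if not isinstance(n, allowed):
            raise ValueError("Unsafe expression")
        # Only numeric literals; rejects string tricks such as "'a' * 10**9".
        if isinstance(n, ast.Constant) and not isinstance(n.value, (int, float)):
            raise ValueError("Only numeric constants are allowed")
        for c in ast.iter_child_nodes(n):
            check(c)

    check(node)
    # Evaluate with no builtins; "<expr>" is only a label used in tracebacks.
    return str(eval(compile(node, "<expr>", "eval"), {"__builtins__": {}}, {}))
```

We implement two key tools for the agent:

- a safe math evaluator, which parses an expression with ast and checks every node against a whitelist before executing it
- a simple document search, which retrieves the most relevant snippets from a small in-memory corpus

Together they give the agent reliable computation and retrieval without external dependencies.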
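`node_research` calls `search_docs(q, k=2)`, but that function and its corpus are not part of the code shown here. The sketch below is an assumed stand-in: a hypothetical four-document corpus, ranked by word overlap with the query.

It keeps the `search_docs(query, k)` call shape that `node_research` relies on and returns a list of strings. The keyword-overlap scoring is a simple choice made for illustration, not necessarily the tutorial's own method.

```python
import re
from typing import List

# Hypothetical in-memory corpus (illustrative text, not the tutorial's documents).
DOCS = [
    "Solar output follows daylight and weather, so it varies by hour and season.",
    "Wind turbines can generate at night, but output depends on wind speed.",
    "Battery storage can smooth short-term gaps in solar and wind supply.",
    "Capacity factor compares actual energy produced with maximum possible output.",
]

def _tokens(text: str) -> set:
    """Lowercase alphanumeric word tokens, punctuation stripped."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def search_docs(query: str, k: int = 2) -> List[str]:
    """Return up to k documents ranked by how many query words they share."""
    q = _tokens(query)
    scored = [(len(q & _tokens(d)), i, d) for i, d in enumerate(DOCS)]
    # Highest overlap first; ties keep corpus order.
    scored.sort(key=lambda t: (-t[0], t[1]))
    # Documents sharing no words with the query are dropped entirely.
    return [d for score, _, d in scored[:k] if score > 0]

print(search_docs("wind at night", k=1))
```

Returning an empty list for unrelated queries lets `node_research` proceed with no evidence rather than citing irrelevant text.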

```python
@dataclass
class State:
    task: str
    plan: str = ""
    scratch: List[str] = field(default_factory=list)
    evidence: List[str] = field(default_factory=list)
    result: str = ""
    step: int = 0
    done: bool = False

def node_plan(state: State, model) -> str:
    prompt = f"""Plan step-by-step to solve the user task.
Task: {state.task}
Return JSON: {{"subtasks": ["..."], "tools": {{"search": true/false, "math": true/false}}, "success_criteria": ["..."]}}"""
    js = call_llm(model, prompt)
    try:
        # Keep only the outermost {...} in case the model wraps the JSON in prose.
        plan = json.loads(js[js.find("{"): js.rfind("}")+1])
    except Exception:
        plan = {"subtasks": ["Research", "Synthesize"], "tools": {"search": True, "math": False}, "success_criteria": ["clear answer"]}
    state.plan = json.dumps(plan, indent=2)
    state.scratch.append("PLAN:\n"+state.plan)
    return "route"

def node_route(state: State, model) -> str:
    prompt = f"""You are a router. Decide next node.
Context scratch:\n{chr(10).join(state.scratch[-5:])}
If math needed -> 'math', if research needed -> 'research', if ready -> 'write'.
Return one token from [research, math, write]. Task: {state.task}"""
    choice = call_llm(model, prompt).lower()
    # Visit each tool node at most once, so a repetitive router cannot use up
    # the whole max_steps budget before "write" ever runs.
    math_done = any(s.startswith("MATH") for s in state.scratch)
    research_done = any(s.startswith("EVIDENCE") for s in state.scratch)
    if "math" in choice and any(ch.isdigit() for ch in state.task) and not math_done:
        return "math"
    if ("research" in choice or not state.evidence) and not research_done:
        return "research"
    return "write"

def node_research(state: State, model) -> str:
    prompt = f"""Generate 3 focused search queries for:
Task: {state.task}
Return as JSON list of strings."""
    qjson = call_llm(model, prompt)
    try:
        queries = json.loads(qjson[qjson.find("["): qjson.rfind("]")+1])[:3]
    except Exception:
        queries = [state.task, "background "+state.task, "pros cons "+state.task]
    hits = []
    for q in queries:
        hits.extend(search_docs(q, k=2))  # search_docs is the document-search tool
    hits = list(dict.fromkeys(hits))  # de-duplicate, preserving order
    state.evidence.extend(h for h in hits if h not in state.evidence)
    state.scratch.append("EVIDENCE:\n- " + "\n- ".join(hits))
    return "route"

def node_math(state: State, model) -> str:
    prompt = "Extract a single arithmetic expression from this task:\n"+state.task
    expr = call_llm(model, prompt)
    # Keep only arithmetic characters, then map caret exponentiation to Python's **
    # (in Python, ^ is bitwise XOR, which safe_eval_math rejects).
    expr = "".join(ch for ch in expr if ch in "0123456789+-*/().%^ ").replace("^", "**")
    try:
        val = safe_eval_math(expr)
        state.scratch.append(f"MATH: {expr} = {val}")
    except Exception as e:
        state.scratch.append(f"MATH-ERROR: {expr} ({e})")
    return "route"

def node_write(state: State, model) -> str:
    prompt = f"""Write the final answer.
Task: {state.task}
Use the evidence and any math results below, cite inline like [1],[2].
Evidence:\n{chr(10).join(f'[{i+1}] '+e for i,e in enumerate(state.evidence))}
Notes:\n{chr(10).join(state.scratch[-5:])}
Return a concise, structured answer."""
    draft = call_llm(model, prompt, temperature=0.3)
    state.result = draft
    state.scratch.append("DRAFT:\n"+draft)
    return "critic"

def node_critic(state: State, model) -> str:
    prompt = f"""Critique and improve the answer for factuality, missing steps, and clarity.
If fix needed, return improved answer. Else return 'OK'.
Answer:\n{state.result}\nCriteria:\n{state.plan}"""
    crit = call_llm(model, prompt)
    # Treat short replies as "no change"; anything longer replaces the draft.
    if crit.strip().upper() != "OK" and len(crit) > 30:
        state.result = crit.strip()
        state.scratch.append("REVISED")
    state.done = True
    return "end"

NODES: Dict[str, Callable[[State, Any], str]] = {
    "plan": node_plan, "route": node_route, "research": node_research,
    "math": node_math, "write": node_write, "critic": node_critic
}

def run_graph(task: str, api_key: str) -> State:
    model = make_model(api_key)
    state = State(task=task)
    cur = "plan"
    max_steps = 12
    while not state.done and state.step < max_steps:
        state.step += 1
        nxt = NODES[cur](state, model)  # each node mutates state, returns next label
        if nxt == "end":
            break
        cur = nxt
    return state

def ascii_graph():
    return """
START -> plan -> route -> (research <-> route) & (math <-> route) -> write -> critic -> END
"""
```

We define a typed State dataclass that persists the task, plan, evidence, scratch notes, and control flags as the graph executes. We then implement six node functions: a planner, a router, research, math, a writer, and a critic. Each function mutates the state and returns the label of the next node.

We register the nodes in NODES, and run_graph iterates over them until the critic marks the state done or the step budget runs out. Finally, ascii_graph() prints the control flow: the router moves between research and math, then the writer drafts the answer and the critic finalizes it.
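The dispatch pattern can be exercised offline by swapping the LLM-backed nodes for deterministic ones. In this sketch, `ToyState`, the toy node functions, `TOY_NODES`, `run`, and the fixed routing rule are all hypothetical stand-ins.

Only the loop shape follows `run_graph`: each node returns the next label, `"end"` stops the walk, and `max_steps` caps it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class ToyState:
    """Hypothetical stand-in for State, trimmed to what the loop needs."""
    step: int = 0
    done: bool = False
    trace: List[str] = field(default_factory=list)
    evidence: List[str] = field(default_factory=list)

def plan(s: ToyState) -> str:
    return "route"

def route(s: ToyState) -> str:
    # Fixed rule in place of an LLM call: research until evidence exists, then write.
    return "research" if not s.evidence else "write"

def research(s: ToyState) -> str:
    s.evidence.append("doc-1")
    return "route"

def write(s: ToyState) -> str:
    return "critic"

def critic(s: ToyState) -> str:
    s.done = True
    return "end"

TOY_NODES: Dict[str, Callable[[ToyState], str]] = {
    "plan": plan, "route": route, "research": research,
    "write": write, "critic": critic,
}

def run(nodes: Dict[str, Callable[[ToyState], str]], start: str = "plan",
        max_steps: int = 12) -> ToyState:
    """Same loop shape as run_graph: call a node, then follow its returned label."""
    s, cur = ToyState(), start
    while not s.done and s.step < max_steps:
        s.step += 1
        s.trace.append(cur)
        nxt = nodes[cur](s)
        if nxt == "end":
            break
        cur = nxt
    return s

state = run(TOY_NODES)
print(" -> ".join(state.trace))  # plan -> route -> research -> route -> write -> critic

# A router that never picks "write" is cut off by the step budget.
looping = dict(TOY_NODES, route=lambda s: "research")
stuck = run(looping, max_steps=5)
print(stuck.step, stuck.done)  # 5 False
```

The second run shows why the step budget matters. A router that never chooses `write` is stopped after `max_steps` visits with `done` still `False`. In the real agent, `State.result` would then stay empty.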

```python
if __name__ == "__main__":
    # Prefer the environment variable; otherwise prompt without echoing the key.
    key = os.getenv("GEMINI_API_KEY") or getpass.getpass("🔐 Enter GEMINI_API_KEY: ")
    task = input("📝 Enter your task: ").strip() or "Compare solar vs wind for reliability; compute 5*7."
    t0 = time.time()
    state = run_graph(task, key)
    dt = time.time() - t0
    print("\n=== GRAPH ===", ascii_graph())
    print(f"\n✅ Result in {dt:.2f}s:\n{state.result}\n")
    print("---- Evidence ----")
    print("\n".join(state.evidence))
    print("\n---- Scratch (last 5) ----")
    print("\n".join(state.scratch[-5:]))
```

We define the program's entry point. It reads the Gemini API key from the environment, or prompts for it without echoing it, takes a task as input, and runs the graph through run_graph. We then measure execution time and print the ASCII graph of the workflow and the final result. For transparency, we also output the supporting evidence and the last five scratch notes.

In conclusion, we demonstrate how a graph-structured agent lets us build deterministic workflows around a probabilistic LLM. The planner node enforces task decomposition, and the router selects between research and math at run time. The critic then makes a final review-and-revise pass for factuality and clarity. Gemini acts as the central reasoning engine, while the graph nodes supply structure, safety checks, and transparent state management. The result is a fully functional agent that shows the benefits of combining graph orchestration with a modern LLM. It also leaves room for extensions such as custom toolchains, multi-turn memory, or parallel node execution in more complex deployments.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.