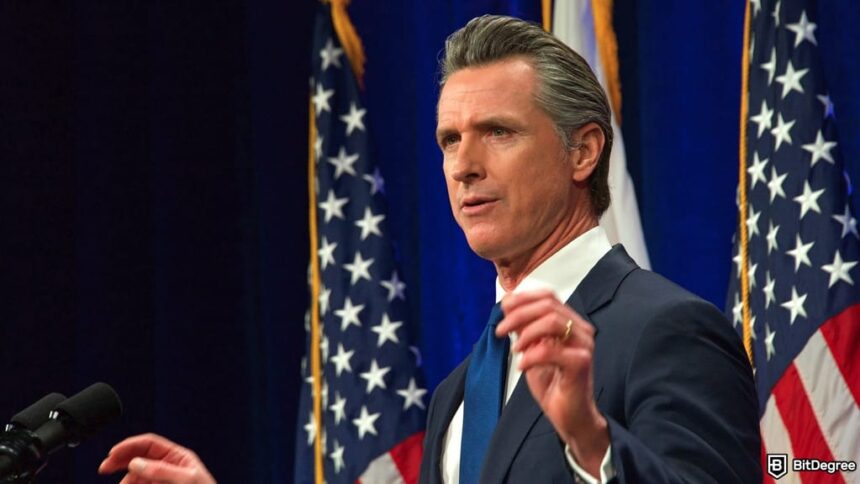

A proposal in California that would regulate artificial intelligence (AI) chatbots designed for personal interaction has passed the state legislature and awaits approval from Governor Gavin Newsom.

Known as Senate Bill 243, the legislation received backing from both Democratic and Republican lawmakers. Newsom must decide whether to approve or reject it by October 12.

If signed, the law would take effect on January 1, 2026, making California the first US state to require companies that develop or run AI companions to follow specific safety practices.

The bill outlines several new responsibilities for companies offering AI companions, meaning programs that simulate human-like responses to fulfill users’ social or emotional needs.

One key requirement is that these systems must frequently notify users, especially minors, that they are communicating with a machine. For users under 18, these reminders would appear every three hours, along with prompts to take breaks.

Additionally, companies would need to report annually on how their systems are being used. These reports, required starting in July 2027, would need to include information on how often users are directed to mental health or emergency services.

Under the proposed law, individuals who feel they have been harmed due to a company’s failure to follow the rules would be allowed to sue. They could seek court-ordered changes, compensation (up to $1,000 per violation), and legal costs.

The US Federal Trade Commission (FTC) recently opened a formal review of the potential impact of AI chatbots on children and teenagers.