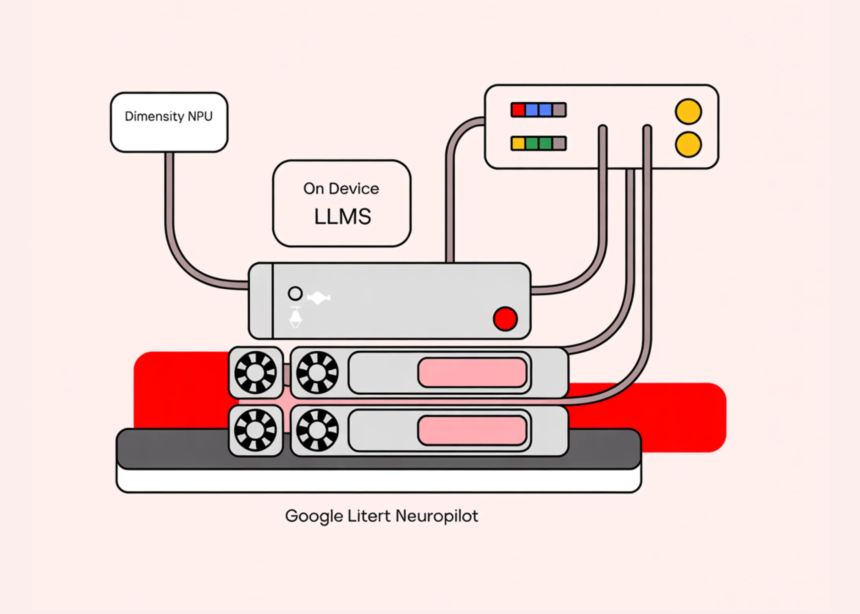

The new LiteRT NeuroPilot Accelerator from Google and MediaTek is a concrete step toward running real generative models on phones, laptops, and IoT hardware without shipping every request to a data center. It takes the existing LiteRT runtime and wires it directly into MediaTek’s NeuroPilot NPU stack, so developers can deploy LLMs and embedding models through a single API surface instead of per-chip custom code.

What is LiteRT NeuroPilot Accelerator?

LiteRT is the successor to TensorFlow Lite. It is a high performance on device runtime that runs models in the .tflite FlatBuffer format and can target CPU, GPU and now NPU backends through a unified hardware acceleration layer.

LiteRT NeuroPilot Accelerator is the new NPU path for MediaTek hardware. It replaces the older TFLite NeuroPilot delegate with a direct integration to the NeuroPilot compiler and runtime. Instead of treating the NPU as a thin delegate, LiteRT now uses a Compiled Model API that understands Ahead of Time (AOT) compilation and on device compilation, and exposes both through the same C++ and Kotlin APIs.

On the hardware side, the integration currently targets MediaTek Dimensity 7300, 8300, 9000, 9200, 9300 and 9400 SoCs, which together cover a large part of the Android mid range and flagship device space.

Why Developers Care, Unified Workflow For Fragmented NPUs

Historically, on device ML stacks were CPU and GPU first. NPU SDKs shipped as vendor specific toolchains that required separate compilation flows per SoC, custom delegates, and manual runtime packaging. The result was a combinatorial explosion of binaries and a lot of device specific debugging.

LiteRT NeuroPilot Accelerator replaces that with a three step workflow that is the same regardless of which MediaTek NPU is present:

- Convert or load a .tflite model as usual.

- Optionally use the LiteRT Python tools to run AOT compilation and produce an AI Pack that is tied to one or more target SoCs.

- Ship the AI Pack through Play for On-device AI (PODAI), then select Accelerator.NPU at runtime. LiteRT handles device targeting and runtime loading, and falls back to GPU or CPU if the NPU is not available.
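The fallback behavior in the last step can be pictured with a small sketch. This is not LiteRT code: the `Accelerator` enum, the `DeviceCapabilities` struct and `SelectAccelerator` are hypothetical names that only illustrate the NPU, then GPU, then CPU preference order the runtime is described as applying.

```cpp
#include <string>

// Hypothetical stand-in for the accelerator choice; not the LiteRT enum.
enum class Accelerator { kNpu, kGpu, kCpu };

// Hypothetical summary of what the current device can offer.
struct DeviceCapabilities {
  bool has_supported_npu;  // a usable NPU path exists for this SoC
  bool has_gpu;            // a GPU backend is available
};

// Walks the preference order NPU -> GPU -> CPU and returns the first
// backend the device can serve. CPU is treated as always available.
Accelerator SelectAccelerator(const DeviceCapabilities& caps) {
  if (caps.has_supported_npu) return Accelerator::kNpu;
  if (caps.has_gpu) return Accelerator::kGpu;
  return Accelerator::kCpu;
}

// Human-readable name, useful for logging which path was taken.
std::string ToString(Accelerator accelerator) {
  switch (accelerator) {
    case Accelerator::kNpu: return "NPU";
    case Accelerator::kGpu: return "GPU";
    case Accelerator::kCpu: return "CPU";
  }
  return "unknown";
}
```

In the real stack this decision is made inside LiteRT, so application code does not need to write it; the sketch only makes the order of preference explicit.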

For you as an engineer, the main change is that device targeting logic moves into a structured configuration file and Play delivery, while the app code mostly interacts with CompiledModel and Accelerator.NPU.

AOT and on device compilation are both supported. AOT compiles for a known SoC ahead of time and is recommended for larger models because it removes the cost of compiling on the user's device. On device compilation is better suited to small models and generic .tflite distribution, at the cost of higher first run latency. The announcement blog post reports that for a model such as Gemma-3-270M, pure on device compilation can take more than 1 minute, which makes AOT the realistic option for production LLM use.
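One way to reason about this tradeoff is as a simple budget check. The sketch below is an illustration, not part of LiteRT: `CompileStrategy`, `ChooseCompileStrategy` and the idea of a "first run budget" are assumptions introduced here, and the compile time estimate would have to come from your own measurements.

```cpp
#include <cassert>

// Hypothetical labels for the two compilation paths described above.
enum class CompileStrategy { kAheadOfTime, kOnDevice };

// Picks AOT when the estimated on device compile time would exceed the
// first run latency the product can tolerate; otherwise keeps the simpler
// on device path. Both inputs are in seconds.
CompileStrategy ChooseCompileStrategy(double estimated_compile_seconds,
                                      double first_run_budget_seconds) {
  assert(estimated_compile_seconds >= 0.0);
  assert(first_run_budget_seconds >= 0.0);
  return estimated_compile_seconds > first_run_budget_seconds
             ? CompileStrategy::kAheadOfTime
             : CompileStrategy::kOnDevice;
}
```

With the figure quoted above, a model that needs 60 seconds or more to compile on device exceeds any plausible interactive budget (say, an assumed 5 seconds), so the check selects AOT; a small model that compiles in well under a second stays on the on device path.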

Gemma, Qwen, And Embedding Models On MediaTek NPU

The stack is built around open weight models rather than a single proprietary NLU path. Google and MediaTek list explicit, production oriented support for:

- Qwen3 0.6B, for text generation in markets such as mainland China.

- Gemma-3-270M, a compact base model that is easy to fine tune for tasks like sentiment analysis and entity extraction.

- Gemma-3-1B, a multilingual text only model for summarization and general reasoning.

- Gemma-3n E2B, a multimodal model that handles text, audio and vision for things like real time translation and visual question answering.

- EmbeddingGemma 300M, a text embedding model for retrieval augmented generation, semantic search and classification.

On the latest Dimensity 9500, running on a Vivo X300 Pro, the Gemma 3n E2B variant reaches more than 1600 tokens per second in prefill and 28 tokens per second in decode at a 4K context length when executed on the NPU.
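To translate these throughput figures into user facing latency, divide token counts by the corresponding rate. The sketch below does that arithmetic; `LatencyEstimate` and `EstimateLatency` are hypothetical helper names, and the model ignores any fixed startup cost.

```cpp
#include <cassert>

// Estimated time split between reading the prompt (prefill) and
// generating the reply (decode), all in seconds.
struct LatencyEstimate {
  double prefill_seconds;
  double decode_seconds;
  double total_seconds;
};

// Simple throughput model: time = tokens / tokens_per_second for each phase.
LatencyEstimate EstimateLatency(int prompt_tokens, int output_tokens,
                                double prefill_tokens_per_second,
                                double decode_tokens_per_second) {
  assert(prompt_tokens >= 0 && output_tokens >= 0);
  assert(prefill_tokens_per_second > 0.0 && decode_tokens_per_second > 0.0);
  const double prefill = prompt_tokens / prefill_tokens_per_second;
  const double decode = output_tokens / decode_tokens_per_second;
  return LatencyEstimate{prefill, decode, prefill + decode};
}
```

For a full 4,096 token prompt at 1600 tokens per second, `EstimateLatency(4096, 280, 1600.0, 28.0)` gives about 2.56 seconds of prefill, and an assumed 280 token reply at 28 tokens per second adds about 10 seconds of decode. Because the reported prefill rate is "more than 1600", the prefill figure is an upper bound.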

For text generation use cases, LiteRT-LM sits on top of LiteRT and exposes a stateful engine with a text in text out API. A typical C++ flow is to create ModelAssets, build an Engine with litert::lm::Backend::NPU, then create a Session and call GenerateContent per conversation. For embedding workloads, EmbeddingGemma uses the lower level LiteRT CompiledModel API in a tensor in tensor out configuration, again with the NPU selected through hardware accelerator options.
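In the tensor in tensor out case, what the app does with the output vector is up to the developer. A common choice for semantic search is to rank stored document embeddings by cosine similarity to the query embedding. The sketch below assumes the embeddings have already been read out of the output buffers into `std::vector<float>`; `CosineSimilarity` and `MostSimilar` are hypothetical helpers, not LiteRT functions.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Cosine similarity between two embeddings of equal, non-zero length.
// Returns 0 when either vector has zero magnitude.
float CosineSimilarity(const std::vector<float>& a,
                       const std::vector<float>& b) {
  assert(a.size() == b.size() && !a.empty());
  double dot = 0.0, norm_a = 0.0, norm_b = 0.0;
  for (std::size_t i = 0; i < a.size(); ++i) {
    dot += static_cast<double>(a[i]) * b[i];
    norm_a += static_cast<double>(a[i]) * a[i];
    norm_b += static_cast<double>(b[i]) * b[i];
  }
  if (norm_a == 0.0 || norm_b == 0.0) return 0.0f;
  return static_cast<float>(dot / (std::sqrt(norm_a) * std::sqrt(norm_b)));
}

// Returns the index of the document embedding closest to the query.
// Ties keep the earliest index.
std::size_t MostSimilar(const std::vector<float>& query,
                        const std::vector<std::vector<float>>& documents) {
  assert(!documents.empty());
  std::size_t best_index = 0;
  float best_score = CosineSimilarity(query, documents[0]);
  for (std::size_t i = 1; i < documents.size(); ++i) {
    const float score = CosineSimilarity(query, documents[i]);
    if (score > best_score) {
      best_score = score;
      best_index = i;
    }
  }
  return best_index;
}
```

Cosine similarity ignores vector magnitude, so a document embedding that points in the same direction as the query ranks first regardless of its length.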

Developer Experience, C++ Pipeline And Zero Copy Buffers

LiteRT introduces a new C++ API that replaces the older C entry points and is designed around explicit Environment, Model, CompiledModel and TensorBuffer objects.

For MediaTek NPUs, this API integrates tightly with Android’s AHardwareBuffer and GPU buffers. You can construct input TensorBuffer instances directly from OpenGL or OpenCL buffers with TensorBuffer::CreateFromGlBuffer, which lets image processing code feed NPU inputs without an intermediate copy through CPU memory. This is important for real time camera and video processing where multiple copies per frame quickly saturate memory bandwidth.
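A rough calculation shows why the extra copies matter. The sketch below multiplies frame size, frame rate and the number of intermediate copies per frame; `CopyBytesPerSecond` is a hypothetical helper, and the resolution, pixel format and copy count in the example are illustrative assumptions rather than measurements.

```cpp
#include <cstdint>

// Bytes copied per second when every frame is duplicated
// `copies_per_frame` times on its way to the accelerator.
// A zero copy path corresponds to copies_per_frame == 0.
constexpr std::uint64_t CopyBytesPerSecond(std::uint32_t width,
                                           std::uint32_t height,
                                           std::uint32_t bytes_per_pixel,
                                           std::uint32_t frames_per_second,
                                           std::uint32_t copies_per_frame) {
  return static_cast<std::uint64_t>(width) * height * bytes_per_pixel *
         frames_per_second * copies_per_frame;
}

// 1920x1080 RGBA (4 bytes per pixel) at 30 fps with two intermediate copies.
static_assert(CopyBytesPerSecond(1920, 1080, 4, 30, 2) == 497664000ULL,
              "two copies of a 1080p RGBA stream move about 498 MB per second");
```

Each copy is both a read and a write, so the actual memory traffic is roughly double the figure above, and it grows linearly with resolution and frame rate. Feeding the NPU directly from a GPU buffer removes that term.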

A typical high level C++ path on device looks like this, omitting error handling for clarity:

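    // Create the LiteRT environment that CompiledModel::Create expects
    // (empty options assumed here)
    auto env = Environment::Create({});

    // Load model compiled for NPU
    auto model = Model::CreateFromFile("model.tflite");
    auto options = Options::Create();
    options->SetHardwareAccelerators(kLiteRtHwAcceleratorNpu);

    // Create compiled model
    auto compiled = CompiledModel::Create(*env, *model, *options);

    // Allocate buffers and run. The factory calls return Expected wrappers,
    // so the buffer vectors are dereferenced before use; real code should
    // check each result first.
    auto input_buffers = compiled->CreateInputBuffers();
    auto output_buffers = compiled->CreateOutputBuffers();
    (*input_buffers)[0].Write(input_span);
    compiled->Run(*input_buffers, *output_buffers);
    (*output_buffers)[0].Read(output_span);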

The same Compiled Model API is used whether you are targeting CPU, GPU or the MediaTek NPU, which reduces the amount of conditional logic in application code.

Key Takeaways

- LiteRT NeuroPilot Accelerator is the new, first class NPU integration between LiteRT and MediaTek NeuroPilot, replacing the old TFLite delegate and exposing a unified Compiled Model API with AOT and on device compilation on supported Dimensity SoCs.

- The stack targets concrete open weight models, including Qwen3-0.6B, Gemma-3-270M, Gemma-3-1B, Gemma-3n-E2B and EmbeddingGemma-300M, and runs them through LiteRT and LiteRT LM on MediaTek NPUs with a single accelerator abstraction.

- AOT compilation is strongly recommended for LLMs. Gemma-3-270M, for example, can take more than 1 minute to compile on device, so production deployments should compile once in the build pipeline and ship AI Packs via Play for On-device AI.

- On a Dimensity 9500 class NPU, Gemma-3n-E2B can reach more than 1600 tokens per second in prefill and 28 tokens per second in decode at 4K context, with measured throughput up to 12 times CPU and 10 times GPU for LLM workloads.

- For developers, the C++ and Kotlin LiteRT APIs provide a common path to select Accelerator.NPU, manage compiled models and use zero copy tensor buffers, so CPU, GPU and MediaTek NPU targets can share one code path and one deployment workflow.
