Introduction

Wearable devices are transforming health monitoring by enabling continuous collection of physiological and behavioral signals such as heart rate, activity, temperature, and skin conductance. However, the real-world data that these devices generate is highly prone to missingness due to sensor failures, device removal, charging, motion artifacts, battery-saving modes, and other interruptions. This presents a significant challenge for self-supervised learning (SSL) and foundation models, which typically expect complete, regular data streams. Past solutions often relied on data imputation or discarding incomplete instances, which risks introducing bias or wasting valuable information.

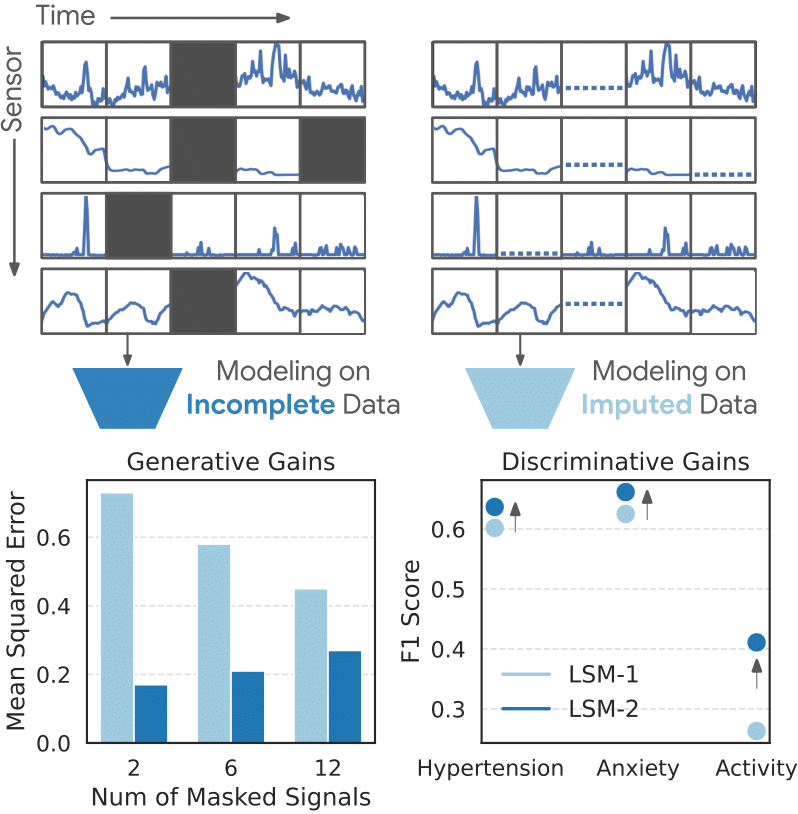

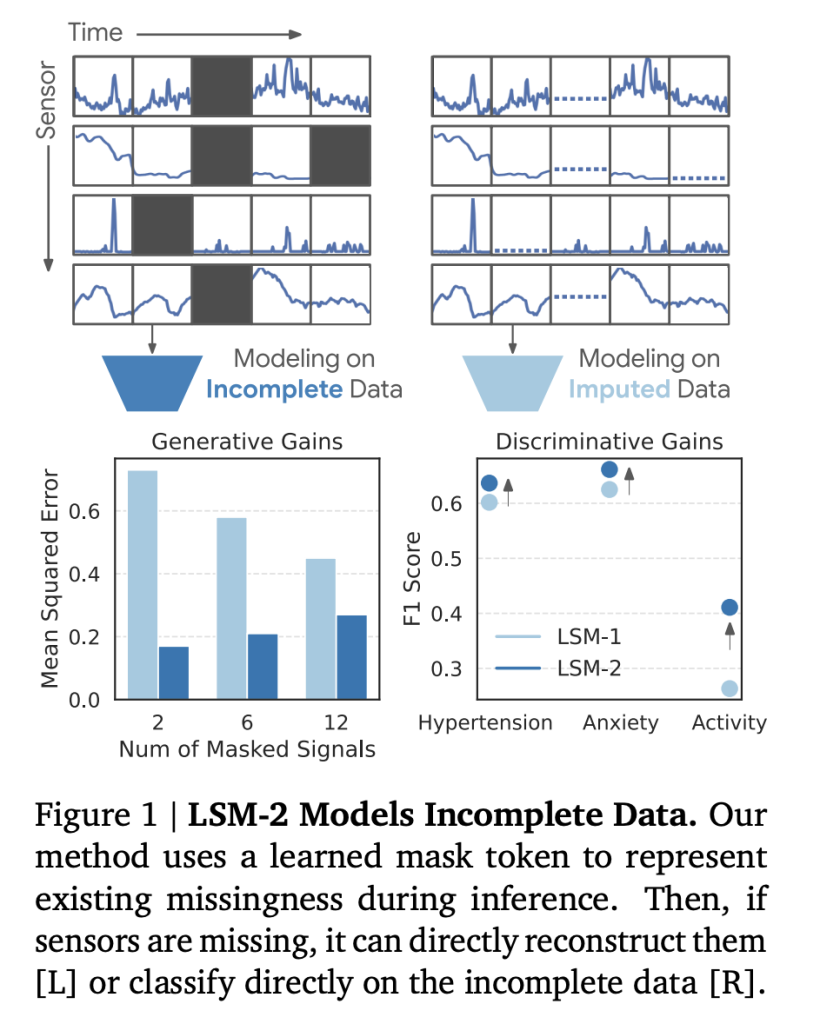

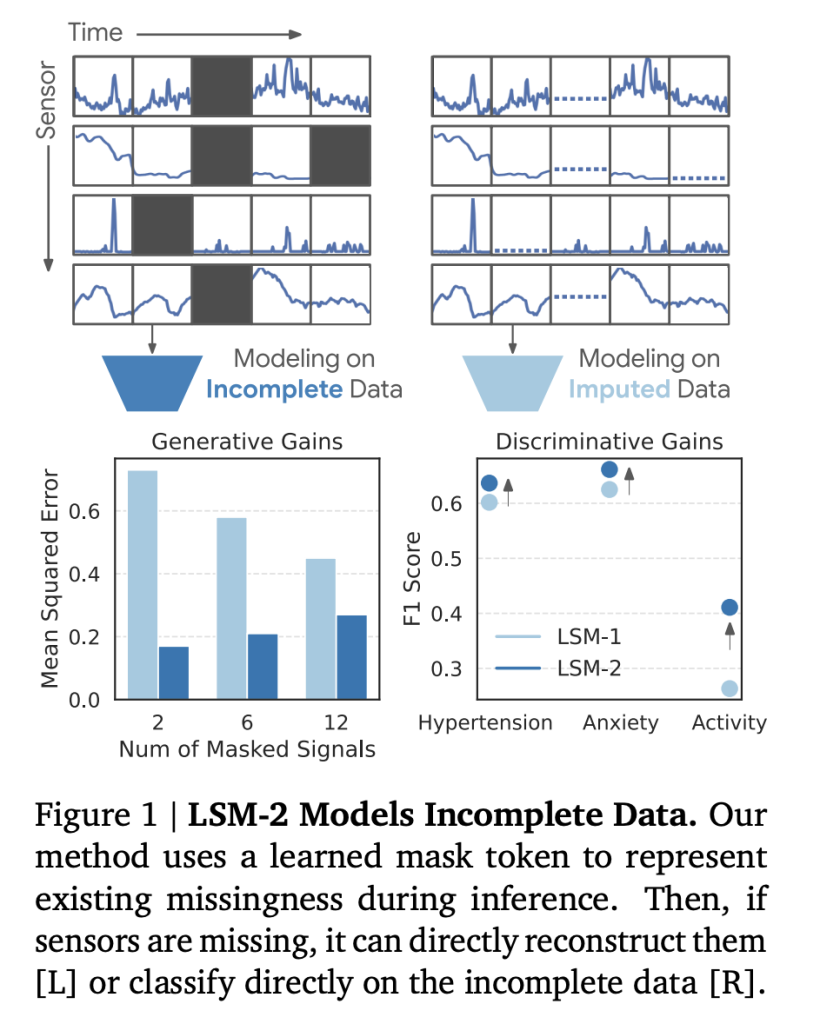

A team of researchers from Google DeepMind introduced the LSM-2 (Large Sensor Model 2) framework, together with a new Adaptive and Inherited Masking (AIM) strategy, to address these issues directly: the model learns robust representations from incomplete wearable sensor data without explicit imputation. Below, we examine the technical innovations, empirical results, and key insights from this work.

The Challenge: Wearable Data Missingness

- Data Fragmentation: In a large-scale dataset of 1.6 million day-long (1440-minute) wearable data samples, not a single sample (0%) was fully complete. Missingness is ubiquitous and is often structured into long gaps rather than simple random dropouts.

- Missingness Modes: Common causes include:

  - Device off (charging or not worn)

  - Selective sensor deactivation (power-saving or operation-specific)

  - Motion artifacts or environmental noise

  - Out-of-range or physiologically impossible readings filtered out during preprocessing

- Impact on Modeling: Many clinically-relevant physiological patterns (e.g., circadian rhythms, heart rate variability) require analysis of long sequences—where missingness is nearly guaranteed.
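To make the idea of structured missingness concrete, here is a minimal sketch that measures how much of a day-long sample is missing and how long its longest gap is. It assumes a sample is stored as a NumPy array of shape (1440, channels) with NaN marking missing minutes; the helper names `missing_fraction` and `longest_gap` and the toy data are hypothetical and are not taken from the LSM-2 work.

```python
import numpy as np

MINUTES_PER_DAY = 1440  # one day at one-minute resolution


def missing_fraction(day: np.ndarray) -> float:
    """Fraction of (minute, channel) entries that are NaN."""
    return float(np.isnan(day).mean())


def longest_gap(channel: np.ndarray) -> int:
    """Length in minutes of the longest run of consecutive NaNs."""
    longest = current = 0
    for is_missing in np.isnan(channel):
        current = current + 1 if is_missing else 0
        longest = max(longest, current)
    return longest


# Synthetic toy day with two hypothetical sensor channels.
rng = np.random.default_rng(0)
day = rng.normal(size=(MINUTES_PER_DAY, 2))
day[300:420, :] = np.nan  # device off for two hours: all channels missing
day[900:960, 1] = np.nan  # one sensor disabled for an hour

frac = missing_fraction(day)
gaps = [longest_gap(day[:, c]) for c in range(day.shape[1])]
print(f"missing fraction: {frac:.3f}, longest gap per channel: {gaps}")
```

Gap length matters as much as the overall fraction: a two-hour block with every channel missing is a very different problem from the same number of isolated dropped minutes.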

Adaptive and Inherited Masking (AIM): Technical Approach

Key Concepts

AIM integrates two masking types for robust learning:

- Inherited Mask: Marks tokens corresponding to real missingness in the sensor data

- Artificial Mask: Randomly masks observed tokens to provide reconstruction targets for self-supervised pretraining

These masks are unioned and handled by a transformer-based encoder-decoder structure, enabling the model to:

- Learn directly from non-imputed, incomplete data

- Adjust dynamically to real-world missingness during inference

- Produce representations robust to both partial and systematic data gaps
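The union of the two masks can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the authors' implementation: tokens are a flat array with NaN marking real missingness, the function name `build_masks` is hypothetical, and the artificial mask is drawn uniformly at random from the observed tokens.

```python
import numpy as np


def build_masks(tokens: np.ndarray, artificial_ratio: float, rng):
    """Return (inherited, artificial, combined) boolean masks; True = masked."""
    # Inherited mask: tokens that are genuinely missing in the sensor data.
    inherited = np.isnan(tokens)
    # Artificial mask: hide a random subset of the tokens that WERE observed.
    observed = np.flatnonzero(~inherited)
    n_artificial = int(round(artificial_ratio * observed.size))
    chosen = rng.choice(observed, size=n_artificial, replace=False)
    artificial = np.zeros_like(inherited)
    artificial[chosen] = True
    # The model sees neither kind of token, so the masks are unioned.
    combined = inherited | artificial
    return inherited, artificial, combined


rng = np.random.default_rng(0)
tokens = np.arange(12, dtype=float)
tokens[[2, 3, 7, 8]] = np.nan  # synthetic real-world gaps

inherited, artificial, combined = build_masks(tokens, 0.5, rng)
# Only artificially masked tokens have ground truth to reconstruct.
reconstruction_targets = tokens[artificial]
```

Only the artificially masked positions can serve as reconstruction targets, because the inherited positions have no ground-truth value to compare against.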

Masking Strategies for Pretraining

- Random Imputation: Dropping 80% of tokens at random, simulating sensor noise

- Temporal Slices: Dropping 50% of temporal windows (all sensors missing during random periods)

- Sensor Slices: Dropping 50% of sensor channels across the entire day (modeling selective sensor off periods)
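The three strategies differ only in which axis of the (time, sensor) grid gets masked. The sketch below assumes a hypothetical grid of 144 time windows by 10 sensor channels and treats each row as one temporal window; the grid size and function names are illustrative assumptions, not details from the source.

```python
import numpy as np


def random_mask(shape, ratio, rng):
    """Mask individual (time, sensor) tokens uniformly at random."""
    n_tokens = shape[0] * shape[1]
    mask = np.zeros(n_tokens, dtype=bool)
    n_masked = int(round(ratio * n_tokens))
    mask[rng.choice(n_tokens, size=n_masked, replace=False)] = True
    return mask.reshape(shape)


def temporal_slice_mask(shape, ratio, rng):
    """Mask every sensor during a random subset of time windows."""
    mask = np.zeros(shape, dtype=bool)
    n_rows = int(round(ratio * shape[0]))
    mask[rng.choice(shape[0], size=n_rows, replace=False), :] = True
    return mask


def sensor_slice_mask(shape, ratio, rng):
    """Mask a random subset of sensor channels for the whole day."""
    mask = np.zeros(shape, dtype=bool)
    n_cols = int(round(ratio * shape[1]))
    mask[:, rng.choice(shape[1], size=n_cols, replace=False)] = True
    return mask


rng = np.random.default_rng(0)
grid = (144, 10)  # hypothetical: 144 time windows x 10 channels
m_random = random_mask(grid, 0.8, rng)
m_temporal = temporal_slice_mask(grid, 0.5, rng)
m_sensor = sensor_slice_mask(grid, 0.5, rng)
```

In an AIM-style setup, each of these would act as the artificial mask and be unioned with whatever missingness the sample already contains.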

AIM combines the efficiency of dropout masking (removal from computation) and the flexibility of attention masking (support for dynamically-varying missingness), allowing the model to scale to long input sequences (day-long, >3,000 tokens).
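One way to picture the hybrid is sketched below. It assumes that a fixed number of masked tokens is removed outright, so every sample keeps the same sequence length, and that any masked tokens left over are hidden with an attention mask. The fixed-count split, the function names, and the single-head attention are simplifying assumptions made for illustration, not a description of the actual LSM-2 code.

```python
import numpy as np


def split_tokens(mask: np.ndarray, n_drop: int):
    """Drop n_drop masked tokens; return kept indices and an attend flag."""
    masked_idx = np.flatnonzero(mask)
    if masked_idx.size < n_drop:
        raise ValueError("need at least n_drop masked tokens")
    dropped = masked_idx[:n_drop]  # dropout masking: removed from compute
    keep = np.setdiff1d(np.arange(mask.size), dropped)
    attend = ~mask[keep]  # attention masking: leftover masked tokens are hidden
    return keep, attend


def masked_self_attention(x: np.ndarray, attend: np.ndarray):
    """Single-head self-attention that ignores keys where attend is False."""
    scores = x @ x.T / np.sqrt(x.shape[1])
    scores = np.where(attend[None, :], scores, -np.inf)
    weights = np.exp(scores - scores.max(axis=1, keepdims=True))
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ x, weights


rng = np.random.default_rng(0)
embeddings = rng.normal(size=(8, 4))  # 8 tokens with placeholder embeddings
# Two samples with different amounts of missingness (True = masked).
mask_a = np.array([1, 1, 0, 1, 0, 1, 1, 0], dtype=bool)  # 5 masked
mask_b = np.array([1, 1, 1, 0, 1, 1, 0, 1], dtype=bool)  # 6 masked

keep_a, attend_a = split_tokens(mask_a, n_drop=4)
keep_b, attend_b = split_tokens(mask_b, n_drop=4)
out_a, weights_a = masked_self_attention(embeddings[keep_a], attend_a)
out_b, weights_b = masked_self_attention(embeddings[keep_b], attend_b)
```

Both samples end up with four tokens in the encoder even though they started with different numbers of masked tokens, which is what lets variable missingness coexist with fixed-shape batches.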

Dataset and Pretraining Details

- Scale: 40 million hours of day-long, multimodal sensor data, collected from 60,440 participants between March and May 2024.

- Sensors: Photoplethysmography (PPG), accelerometer, electrodermal activity (EDA), skin temperature, and altimeter. Each device contributed minutely aggregated features across a 24-hour window.

- Demographic Diversity: Participants across a wide range of ages (18–96), genders, and BMI classes.

- Downstream Labeled Data:

  - Metabolic Study (hypertension, anxiety prediction; n=1,250 labeled users)

  - Activity Recognition (20 activity classes, 104,086 events)

Evaluation and Results

Downstream Tasks

AIM-based LSM-2 was assessed on:

- Classification: Binary hypertension, anxiety, and 20-class activity recognition

- Regression: Age and BMI

- Generative: Recovery of missing sensor data (random imputation, temporal/signal gaps)

Quantitative Results

| Task | Metric | Best LSM-1 | LSM-2 w/ AIM | Improvement |
|---|---|---|---|---|
| Hypertension | F1 | 0.640 | 0.651 | +1.7% |
| Activity Recognition | F1 | 0.470 | 0.474 | +0.8% |
| BMI (regression) | Corr | 0.667 | 0.673 | +1.0% |
| Random Imputation (80%) | MSE (↓) | 0.30 | 0.20 | 33% lower error |
| 2-signal Recovery | MSE (↓) | 0.73 | 0.17 | 77% lower error |
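The Improvement column reports relative change, which is easy to misread as absolute points. The short sketch below recomputes three of the rows from the rounded table values; the helper names are hypothetical.

```python
def relative_gain(baseline: float, new: float) -> float:
    """Percent increase of a higher-is-better metric over the baseline."""
    return 100.0 * (new - baseline) / baseline


def relative_error_reduction(baseline: float, new: float) -> float:
    """Percent decrease of a lower-is-better metric (e.g. MSE)."""
    return 100.0 * (baseline - new) / baseline


hypertension = relative_gain(0.640, 0.651)                  # about 1.7
random_imputation = relative_error_reduction(0.30, 0.20)    # about 33.3
two_signal = relative_error_reduction(0.73, 0.17)           # about 76.7

print(f"{hypertension:.1f}% {random_imputation:.1f}% {two_signal:.1f}%")
```

The discriminative gains are small in relative terms, while the generative (reconstruction) gains are large, which is where learning from incomplete data appears to pay off most.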

- Robustness to Targeted Missingness: When specific sensors or time windows were artificially removed, LSM-2 with AIM showed performance drops that were 73% smaller on average than those of LSM-1. For example, removing accelerometry for activity recognition reduced F1 by 57% for LSM-2, compared with 71% for LSM-1, and LSM-2 retained a 47% higher absolute F1 after the ablation.

- Clinical Coherence: The model’s performance drop matched domain expectations. Nighttime biosignal removal significantly reduced hypertension/anxiety prediction accuracy (reflecting real-world diagnostic value of nocturnal data).

- Scaling: LSM-2 exhibited better scaling than LSM-1 in terms of subjects, data, compute, and model size, with no saturation observed in performance gains.

Technical Insights

- Direct Handling of Real-World Missingness: LSM-2 is the first wearable foundation model trained and evaluated directly on incomplete data, without explicit imputation.

- Hybrid Masking Mechanism: Adaptive and inherited masking achieves both computational efficiency (via dropout removal) and flexibility (via attention masking).

- Generalizable Embeddings: Even with a frozen backbone and simple linear probes, LSM-2 achieves state-of-the-art results in both clinical/person-level and event-level tasks, outperforming supervised and contrastive SSL baselines.

- Generative and Discriminative Power: LSM-2 is the only evaluated model capable of both reconstructing missing signals and generating embeddings applicable across various downstream tasks, suggesting utility for real-world medical and behavioral monitoring applications.
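The frozen-backbone, linear-probe setup mentioned above can be sketched as follows. The embeddings here are synthetic random vectors standing in for the output of a frozen encoder, and the probe is a closed-form ridge regression. The function names, dimensions, and regularization strength are all assumptions chosen for illustration.

```python
import numpy as np


def fit_linear_probe(embeddings: np.ndarray, targets: np.ndarray, l2: float = 1e-3):
    """Fit a ridge-regularized linear map (with bias) on fixed embeddings."""
    X = np.hstack([embeddings, np.ones((embeddings.shape[0], 1))])
    A = X.T @ X + l2 * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ targets)


def predict(embeddings: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Apply the fitted probe; the backbone itself is never updated."""
    X = np.hstack([embeddings, np.ones((embeddings.shape[0], 1))])
    return X @ weights


rng = np.random.default_rng(0)
# Stand-in for frozen encoder outputs: 200 samples, 16-dim embeddings.
frozen_embeddings = rng.normal(size=(200, 16))
true_w = rng.normal(size=16)
# Synthetic regression target (think of a quantity such as age or BMI).
targets = frozen_embeddings @ true_w + 0.5 + 0.01 * rng.normal(size=200)

probe = fit_linear_probe(frozen_embeddings[:150], targets[:150])
held_out_pred = predict(frozen_embeddings[150:], probe)
corr = np.corrcoef(held_out_pred, targets[150:])[0, 1]
```

Because only the small linear layer is trained, the probe's held-out score is a direct measure of how much task-relevant information the frozen embeddings already carry.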

Conclusion

LSM-2 with Adaptive and Inherited Masking presents a major step forward for deploying AI-driven health insights using real-world wearable sensor data. By directly embracing ubiquitous, structured missingness, and unifying generative and discriminative capabilities under one efficient and robust foundation model, this approach lays crucial groundwork for the future of wearable and health AI in realistic, imperfect data environments.

