In this tutorial, we build a fully local, API-free agentic storytelling system using Griptape and a lightweight Hugging Face model. We walk through creating an agent with tool-use abilities, generating a fictional world, designing characters, and orchestrating a multi-stage workflow that produces a coherent short story. By dividing the implementation into modular snippets, we can clearly understand each component as it comes together into an end-to-end creative pipeline. Check out the FULL CODES here.

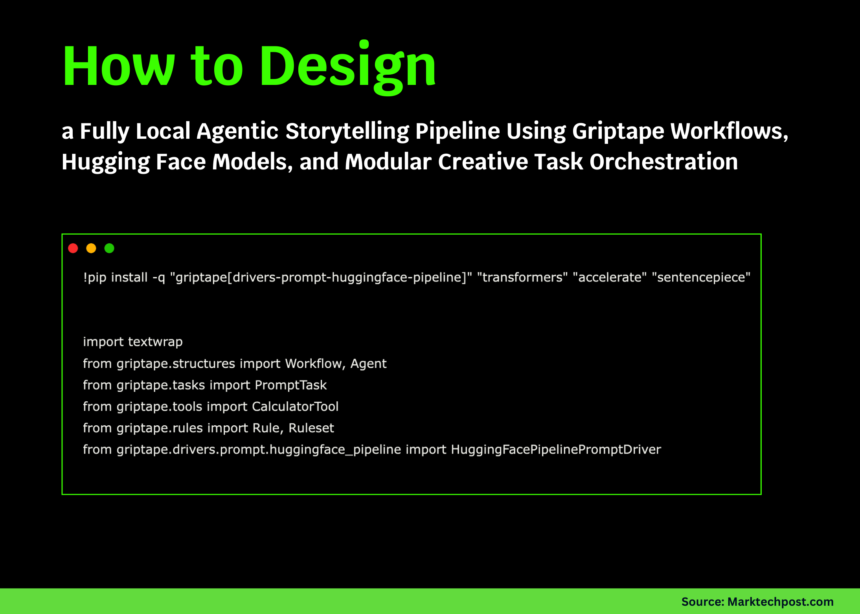

```python
# Notebook (Colab/Jupyter) shell command: Griptape with the local Hugging Face pipeline driver
!pip install -q "griptape[drivers-prompt-huggingface-pipeline]" "transformers" "accelerate" "sentencepiece"

import textwrap

from griptape.structures import Workflow, Agent
from griptape.tasks import PromptTask
from griptape.tools import CalculatorTool
from griptape.rules import Rule, Ruleset
from griptape.drivers.prompt.huggingface_pipeline import HuggingFacePipelinePromptDriver

# Local prompt driver: the model weights are downloaded from the Hugging Face Hub
# on first use, then inference runs on this machine without API keys.
local_driver = HuggingFacePipelinePromptDriver(
    model="TinyLlama/TinyLlama-1.1B-Chat-v1.0",
    max_tokens=256,  # upper limit on tokens generated per call
)

def show(title, content):
    """Print a titled section with the content wrapped at 100 characters."""
    print(f"\n{'='*20} {title} {'='*20}")
    print(textwrap.fill(str(content), width=100))
```

We install Griptape with the Hugging Face pipeline extra and create a `HuggingFacePipelinePromptDriver` that runs TinyLlama-1.1B-Chat locally. The `show` helper prints each result under a title, wrapped to 100 characters, so we can follow each step of the workflow. After the one-time model download, every call runs on our own machine without external APIs.
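Because `max_tokens` caps every generation, it is worth checking whether that budget can cover the longest output the tutorial asks for, the 400 to 700 word story. The sketch below is a back-of-the-envelope estimate and not part of Griptape. It assumes roughly 0.75 English words per token (a common rule of thumb, not measured for TinyLlama's tokenizer) and TinyLlama-1.1B's 2,048-token context window. `budget_report` is a hypothetical helper, and the ~600-word prompt size is an assumed figure for a prompt that contains three upstream outputs plus instructions.

```python
import math

# Assumptions for a rough estimate, not measured values:
WORDS_PER_TOKEN = 0.75   # common rule of thumb for English text
CONTEXT_WINDOW = 2048    # TinyLlama-1.1B context length in tokens


def approx_words(tokens):
    """Roughly how many English words a token budget can produce."""
    return math.floor(tokens * WORDS_PER_TOKEN)


def approx_tokens(words):
    """Roughly how many tokens a given number of words needs."""
    return math.ceil(words / WORDS_PER_TOKEN)


def budget_report(max_tokens, target_words, prompt_words):
    """Hypothetical helper: compare a generation budget with a word target and the context window."""
    needed = approx_tokens(target_words)
    prompt_tokens = approx_tokens(prompt_words)
    return {
        "max_words_generated": approx_words(max_tokens),
        "tokens_needed_for_target": needed,
        "meets_target": max_tokens >= needed,
        "fits_context": prompt_tokens + max_tokens <= CONTEXT_WINDOW,
    }


# The tutorial's setting vs. the story rule's lower bound, with an assumed ~600-word prompt
print(budget_report(max_tokens=256, target_words=400, prompt_words=600))
```

Under these assumptions, 256 tokens yield only about 192 words, while a 400-word story needs about 534 new tokens. Raising `max_tokens` also shrinks the room left for the prompt inside the context window, so the two limits must be balanced.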

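```python
# An Agent that may call the calculator tool while answering
math_agent = Agent(
    prompt_driver=local_driver,
    tools=[CalculatorTool()],
)

math_response = math_agent.run(
    "Compute (37*19)/7 and explain the steps briefly."
)

# The final answer is a text artifact exposed on .output
show("Agent + CalculatorTool", math_response.output.value)
```

We create an agent equipped with `CalculatorTool` and test it with a simple arithmetic prompt. The agent is expected to delegate the computation to the tool and then explain the steps in natural language. The correct answer is (37 × 19) / 7 = 703 / 7 ≈ 100.43. Running this cell confirms that the local driver loads and that a tool can be attached to the agent. With a 1.1B-parameter model, however, the agent may not follow Griptape's tool-calling format reliably, so check the output rather than assuming the calculator was actually used.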
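A small model can skip the tool or garble the arithmetic, so a cheap safeguard is to compare the agent's text against the known result, 703 / 7 ≈ 100.43. The helper below is a hypothetical sketch, not a Griptape feature. It pulls every number out of the response with a regular expression and checks whether any of them falls within a tolerance of the expected value.

```python
import re

EXPECTED = (37 * 19) / 7  # 703 / 7 = 100.428571...

# Integers or decimals, optionally negative (thousands separators are not handled)
NUMBER_RE = re.compile(r"-?\d+(?:\.\d+)?")


def contains_answer(text, expected=EXPECTED, tol=0.01):
    """Return True if any number found in `text` is within `tol` of `expected`."""
    return any(abs(float(m) - expected) <= tol for m in NUMBER_RE.findall(text))


print(contains_answer("37 * 19 = 703, and 703 / 7 is about 100.43."))  # True
print(contains_answer("The result is roughly 98."))                      # False
```

In the notebook you would call `contains_answer(math_response.output.value)`. A rounded answer such as 100.43 passes, but 100.4 fails at this tolerance, so loosen `tol` if the model rounds more coarsely.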

```python
# Root task: {{ args[0] }} is filled with the first argument passed to Workflow.run()
world_task = PromptTask(
    input=(
        "Create a vivid fictional world using these cues: {{ args[0] }}.\n"
        "Describe geography, culture, and conflicts in 3–5 paragraphs."
    ),
    id="world",
    prompt_driver=local_driver,
)

def character_task(task_id, name):
    """Build a character task that depends on the "world" task.

    {{ name }} comes from this task's own context; {{ parent_outputs['world'] }}
    is the text produced by the parent task whose id is "world".
    """
    return PromptTask(
        input=(
            "Based on the world below, invent a detailed character named {{ name }}.\n"
            "World description:\n{{ parent_outputs['world'] }}\n\n"
            "Describe their background, desires, flaws, and one secret."
        ),
        id=task_id,
        parent_ids=["world"],
        prompt_driver=local_driver,
        context={"name": name},
    )

scotty_task = character_task("scotty", "Scotty")
annie_task = character_task("annie", "Annie")
```

We build the world-generation task and use a small factory function, `character_task`, to create one character task per name. Each character task lists `world` in `parent_ids`, so it runs only after the world has been generated and receives that text through `parent_outputs['world']`. The character's name is passed in separately through the task's `context`. These parent links define the dependency graph that the workflow follows.
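The sketch below makes the two sources of template variables concrete with a stdlib-only stand-in for the rendering step. It is not Griptape's implementation, which renders these prompts with Jinja. The `render` and `build_context` functions and their regular expression are hypothetical and support only plain names such as `{{ name }}` and quoted lookups such as `{{ parent_outputs['world'] }}`. The point is that the character's name comes from the task's own `context`, while the world text arrives through `parent_outputs`, keyed by the parent task's `id`.

```python
import re

# Matches {{ name }} or {{ name['key'] }}: a tiny subset of Jinja syntax (hypothetical sketch)
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)(?:\[\s*'([^']*)'\s*\])?\s*\}\}")


def render(template, context):
    """Replace each placeholder with its value looked up in `context`."""
    def substitute(match):
        name, key = match.group(1), match.group(2)
        value = context[name]
        return str(value[key]) if key is not None else str(value)
    return PLACEHOLDER.sub(substitute, template)


def build_context(task_context, parent_outputs):
    """Combine parent outputs (keyed by task id) with the task's own context."""
    return {"parent_outputs": parent_outputs, **task_context}


template = (
    "Based on the world below, invent a detailed character named {{ name }}.\n"
    "World description:\n{{ parent_outputs['world'] }}"
)
context = build_context({"name": "Scotty"}, {"world": "A storm-lashed ocean planet."})
print(render(template, context))
```

Calling `character_task` twice with different names therefore yields two prompts that share the same world text but differ in the name slot.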

```python
# Style rules attached to the final story task
style_ruleset = Ruleset(
    name="StoryStyle",
    rules=[
        Rule("Write in a cinematic, emotionally engaging style."),
        Rule("Avoid explicit gore or graphic violence."),
        Rule("Keep the story between 400 and 700 words."),
    ],
)

# Final task: combines the world and both characters into one story
story_task = PromptTask(
    input=(
        "Write a complete short story using the following elements.\n\n"
        "World:\n{{ parent_outputs['world'] }}\n\n"
        "Character 1 (Scotty):\n{{ parent_outputs['scotty'] }}\n\n"
        "Character 2 (Annie):\n{{ parent_outputs['annie'] }}\n\n"
        "The story must have a clear beginning, middle, and end, with a meaningful character decision near the climax."
    ),
    id="story",
    parent_ids=["world", "scotty", "annie"],
    prompt_driver=local_driver,
    rulesets=[style_ruleset],
)

story_workflow = Workflow(tasks=[world_task, scotty_task, annie_task, story_task])

# The topic becomes args[0] inside the world task's prompt
topic = "tidally locked ocean world with floating cities powered by storms"
story_workflow.run(topic)
```

We define a `Ruleset` of style constraints and attach it to the final story task, which lists all three earlier tasks in `parent_ids` and pulls their outputs into a single prompt. We then assemble the tasks into a `Workflow` and run it with a topic, which becomes `args[0]` in the world prompt. Griptape runs the tasks in dependency order: the world first, then the two characters, and finally the story. Note that rules are instructions to the model rather than enforced constraints. With `max_tokens=256`, the story cannot reach the 400 to 700 words the ruleset requests; raise `max_tokens` for longer stories, keeping TinyLlama's 2,048-token context window in mind, since the story prompt already contains three upstream outputs.
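The `parent_ids` declared in these tasks form a small dependency graph. Griptape's `Workflow` resolves the execution order itself; the sketch below only visualizes that graph with Python's standard `graphlib.TopologicalSorter` (Python 3.9+), grouping tasks into layers whose members do not depend on each other. `execution_layers` is a hypothetical helper, and the dictionary simply copies the task ids and parents from this tutorial.

```python
from graphlib import TopologicalSorter

# Task id -> parent ids, copied from the tutorial's PromptTask definitions
PARENTS = {
    "world": [],
    "scotty": ["world"],
    "annie": ["world"],
    "story": ["world", "scotty", "annie"],
}


def execution_layers(parents):
    """Group task ids into layers; tasks within one layer do not depend on each other."""
    sorter = TopologicalSorter(parents)
    sorter.prepare()
    layers = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for a stable display order
        layers.append(ready)
        sorter.done(*ready)
    return layers


print(execution_layers(PARENTS))
# [['world'], ['annie', 'scotty'], ['story']]
```

The middle layer shows that the Scotty and Annie tasks depend only on the world, so nothing in the graph forces one to wait for the other; only the story must wait for all three.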

```python
# After the run, each task holds its own output artifact
world_text = world_task.output.value
scotty_text = scotty_task.output.value
annie_text = annie_task.output.value
story_text = story_task.output.value

show("Generated World", world_text)
show("Character: Scotty", scotty_text)
show("Character: Annie", annie_text)
show("Final Story", story_text)

def summarize_story(text):
    """Crude length and layout metrics for the generated story."""
    # Non-empty lines, used here as a proxy for paragraphs
    paragraphs = [p for p in text.split("\n") if p.strip()]
    length = len(text.split())  # whitespace-separated word count
    structure_score = min(len(paragraphs), 10)  # paragraph count capped at 10
    return {
        "word_count": length,
        "paragraphs": len(paragraphs),
        "structure_score_0_to_10": structure_score,
    }

metrics = summarize_story(story_text)
show("Story Metrics", metrics)
```

We read each task's output and display the world, the two characters, and the final story. We also compute two simple metrics: the word count and the number of non-empty lines, which serves as a rough paragraph count. The "structure score" is just that count capped at 10, so it is a quick sanity check on length and layout rather than a measure of narrative quality.
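`summarize_story` counts non-empty lines, so a model that hard-wraps its text inflates the paragraph count, and nothing checks the ruleset's word limit. A slightly stricter variant, sketched below as a hypothetical `story_report` helper, treats only blank-line-separated blocks as paragraphs and reports whether the word count falls inside the 400 to 700 word range from the `StoryStyle` ruleset.

```python
import re


def story_report(text, min_words=400, max_words=700):
    """Paragraphs = blocks separated by blank lines; words = whitespace-separated tokens."""
    paragraphs = [p for p in re.split(r"\n\s*\n", text) if p.strip()]
    word_count = len(text.split())
    return {
        "word_count": word_count,
        "paragraphs": len(paragraphs),
        "within_word_rule": min_words <= word_count <= max_words,
    }


sample = "The storm woke the city.\nIt rose with the tide.\n\nAnnie chose to stay."
print(story_report(sample))
# {'word_count': 14, 'paragraphs': 2, 'within_word_rule': False}
```

On this sample, `summarize_story` would report 3 paragraphs (one per non-empty line), whereas `story_report` reports 2. In the notebook you would call `story_report(story_text)`.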

In conclusion, we showed how to orchestrate multi-step reasoning, tool use, and creative generation with a local model in the Griptape framework. Modular tasks, rulesets, and workflows combine into an agentic pipeline that produces structured narrative output. Running everything without external APIs gives us full control over the model and our data, and opens the door to more advanced experiments with local agent pipelines, automated writing systems, and multi-task orchestration.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.