The AI coding landscape just got a massive shake-up. If you’ve been relying on Claude 3.5 Sonnet or GPT-4o for your dev workflows, you know the pain: great performance often comes with a bill that makes your wallet weep, or latency that breaks your flow. This article provides a technical overview of MiniMax-M2, focusing on its core design choices and capabilities, and how it changes the price-to-performance baseline for agentic coding workflows.

Branded as ‘Mini Price, Max Performance,’ MiniMax-M2 targets agentic coding workloads with around 2x the speed of leading competitors at roughly 8% of their price. The key change is not only cost efficiency, but a different computational and reasoning pattern in how the model structures and executes its “thinking” during complex tool and code workflows.

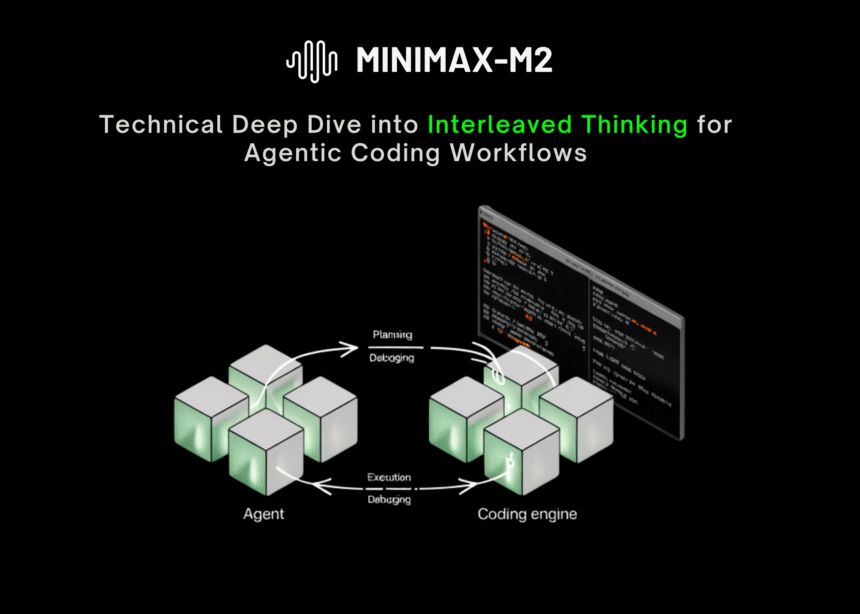

The Secret Sauce: Interleaved Thinking

The standout feature of MiniMax-M2 is its native mastery of Interleaved Thinking.

But what does that actually mean?

Most LLMs operate in a linear “Chain of Thought” (CoT) where they do all their planning upfront and then fire off a series of tool calls (like running code or searching the web). The problem? If the first tool call returns unexpected data, the initial plan becomes stale, leading to “state drift” where the model keeps hallucinating a path that no longer exists.

Interleaved Thinking changes the game by creating a dynamic Plan -> Act -> Reflect loop.

Instead of front-loading all the logic, MiniMax-M2 alternates between explicit reasoning and tool use. It reasons, executes a tool, reads the output, and then reasons again based on that fresh evidence. This allows the model to:

- Self-Correct: If a shell command fails, it reads the error and adjusts its next move immediately.

- Preserve State: It carries forward hypotheses and constraints between steps, preventing the “memory loss” common in long coding tasks.

- Handle Long Horizons: This approach is critical for complex agentic workflows (like building an entire app feature) where the path isn’t clear from step one.
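
To make the loop concrete, here is a minimal Python sketch of an interleaved agent. It is not MiniMax's implementation or API: `toy_reason` stands in for the model, `make_fake_shell` stands in for a real shell tool, and every name in it is hypothetical. The point is only the control flow, where reasoning runs again after each tool result instead of once up front.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Step:
    thought: str            # reasoning produced before acting
    command: Optional[str]  # next tool input, or None when the task is done


@dataclass
class AgentState:
    goal: str
    # Each entry is (thought, command, observation); this is the carried-forward state.
    history: List[Tuple[str, str, str]] = field(default_factory=list)


def run_interleaved(goal: str,
                    reason: Callable[[AgentState], Step],
                    run_tool: Callable[[str], str],
                    max_steps: int = 5) -> AgentState:
    """Alternate reasoning and tool use until the reasoner stops or steps run out."""
    state = AgentState(goal)
    for _ in range(max_steps):
        step = reason(state)                   # plan or reflect on all evidence so far
        if step.command is None:
            break
        observation = run_tool(step.command)   # act
        state.history.append((step.thought, step.command, observation))
    return state


def make_fake_shell() -> Callable[[str], str]:
    """Hypothetical stand-in for a shell: tests fail until 'requests' is installed."""
    installed = set()

    def run(command: str) -> str:
        if command.startswith("pip install "):
            installed.add(command.split()[-1])
            return "ok"
        if command == "pytest":
            return "1 passed" if "requests" in installed else "ModuleNotFoundError: requests"
        return "unknown command"

    return run


def toy_reason(state: AgentState) -> Step:
    """Hypothetical stand-in for the model: picks the next move from the last observation."""
    if not state.history:
        return Step("Run the tests to see where things stand.", "pytest")
    _, last_command, last_observation = state.history[-1]
    if "ModuleNotFoundError" in last_observation:
        missing = last_observation.split(": ")[-1]
        return Step(f"The run failed because {missing} is missing.", f"pip install {missing}")
    if last_command.startswith("pip install"):
        return Step("Dependency installed; re-run the tests.", "pytest")
    return Step("Tests pass; nothing left to do.", None)


final = run_interleaved("make the test suite pass", toy_reason, make_fake_shell())
for thought, command, observation in final.history:
    print(f"{thought}\n  $ {command}\n  {observation}")
```

A plan-everything-upfront agent would have committed to its command list before seeing the import error. Here the failed `pytest` run changes the next action, which is the self-correction behavior described above.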

Benchmarks show the impact is real: enabling Interleaved Thinking boosted MiniMax-M2’s score on SWE-Bench Verified by over 3% and on BrowseComp by a massive 40%.

Powered by Mixture of Experts (MoE): Speed Meets Smarts

How does MiniMax-M2 achieve low latency while being smart enough to replace a senior dev? The answer lies in its Mixture of Experts (MoE) architecture.

MiniMax-M2 is a massive model with 230 billion total parameters, but it uses sparse activation: for each generated token, it activates only 10 billion of them, roughly 4% of the total.

This design delivers the best of both worlds:

- Huge Knowledge Base: You get the deep world knowledge and reasoning capacity of a 200B+ model.

- Blazing Speed: Inference runs with the lightness of a 10B model, enabling high throughput and low latency.
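
As a rough illustration of sparse activation, the sketch below routes one input to the top-k of several toy "experts" and skips the rest. The article does not describe M2's actual router, expert count, or k, so the top-k softmax gating, the 8 experts, and k=2 are all assumptions chosen for illustration. Only the 230B and 10B figures come from the text above.

```python
import math
import random

TOTAL_PARAMS = 230e9   # total parameters, from the article
ACTIVE_PARAMS = 10e9   # parameters activated per token, from the article


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, router_logits, k):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    output = 0.0
    for index, weight in route_top_k(router_logits, k):
        output += weight * experts[index](x)
    return output


# Hypothetical setup: 8 toy experts, 2 active per token.
random.seed(0)
experts = [lambda x, scale=i + 1: x * scale for i in range(8)]
# In a real MoE layer these logits come from a learned router applied to the token;
# random values are used here only as a stand-in.
logits = [random.gauss(0.0, 1.0) for _ in experts]

print("selected experts:", route_top_k(logits, k=2))
print("layer output:", moe_forward(1.0, experts, logits, k=2))
print(f"active share of parameters: {ACTIVE_PARAMS / TOTAL_PARAMS:.1%}")
```

The compute per token scales with the experts that actually run, not with the full parameter count, which is why a sparse model can hold far more weights than it pays for at inference time.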

For interactive agents like Claude Code, Cursor, or Cline, this speed is non-negotiable. You need the model to think, code, and debug in real time without the “thinking…” spinner of death.

Agent & Code Native

MiniMax-M2 wasn’t just trained on text; it was developed for end-to-end developer workflows. It excels at handling robust toolchains including MCP (Model Context Protocol), shell execution, browser retrieval, and complex codebases.

It is already being integrated into the heavy hitters of the AI coding world:

- Claude Code

- Cursor

- Cline

- Kilo Code

- Droid

The Economics: 90% Cheaper than the Competition

The pricing structure is perhaps the most aggressive we’ve seen for a model of this caliber. MiniMax is practically giving away “intelligence” compared to the current market leaders.

API Pricing (vs Claude 3.5 Sonnet):

- Input Tokens: $0.30 / million tokens (10% of Sonnet’s cost)

- Cache Hits: $0.03 / million tokens (10% of Sonnet’s cost)

- Output Tokens: $1.20 / million tokens (8% of Sonnet’s cost)
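
To see what these rates mean for an agent run, the sketch below prices a hypothetical workload. The M2 rates are the ones listed above. The comparison rates are not quoted from any price sheet: they are back-calculated from the percentages in the list (for example, $0.30 divided by 10%), and the token counts are invented for illustration.

```python
# USD per million tokens, taken from the list above.
M2_PRICES = {"input": 0.30, "cache_hit": 0.03, "output": 1.20}

# Share of the comparison model's price, also from the list above.
STATED_RATIOS = {"input": 0.10, "cache_hit": 0.10, "output": 0.08}

# Derived, not quoted: the comparison price implied by each ratio.
IMPLIED_BASELINE = {kind: M2_PRICES[kind] / STATED_RATIOS[kind] for kind in M2_PRICES}


def cost_usd(tokens, prices):
    """Total cost in USD for a dict of token counts keyed like the price table."""
    return sum(count * prices[kind] / 1_000_000 for kind, count in tokens.items())


# Hypothetical agent session: heavy on cached context, light on output.
workload = {"input": 2_000_000, "cache_hit": 8_000_000, "output": 500_000}

m2_cost = cost_usd(workload, M2_PRICES)
baseline_cost = cost_usd(workload, IMPLIED_BASELINE)

print(f"MiniMax-M2:       ${m2_cost:.2f}")
print(f"Implied baseline: ${baseline_cost:.2f}")
print(f"M2 share of baseline cost: {m2_cost / baseline_cost:.0%}")
```

For this made-up mix, the session comes to roughly $1.44 on M2 against roughly $15.90 at the implied comparison rates. The exact share shifts with the input-to-output balance, since output tokens carry the largest discount.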

For individual developers, they offer tiered Coding Plans that undercut the market significantly:

- Starter: $10/month (Includes a $2 first-month promo).

- Pro: $20/month.

- Max: $50/month (Up to 5x the usage limit of Claude Code Max).

MiniMax is also recruiting developer ambassadors. The company is seeking developers with proven open-source experience who are already familiar with MiniMax models and active on platforms like GitHub and Hugging Face.

Key Program Highlights:

- The Incentives: Ambassadors receive complimentary access to the MiniMax-M2 Max Coding Plan, early access to unreleased video and audio models, direct feedback channels with product leads, and potential full-time career opportunities.

- The Role: Participants are expected to build public demos, create open-source tools, and provide critical feedback on APIs before public launches.

You can sign up here.

Editorial Notes

MiniMax-M2 challenges the idea that “smarter” must mean “slower” or “more expensive.” By leveraging MoE efficiency and Interleaved Thinking, it offers a compelling alternative for developers who want to run autonomous agents without bankrupting their API budget.

As we move toward a world where AI agents don’t just write code but architect entire systems, the ability to “think, act, and reflect” continuously, at a price that allows for thousands of iterations, might just make M2 the new standard for AI engineering.

Thanks to the MINIMAX AI team for the thought leadership and resources for this article. The MINIMAX AI team has supported this content.