Environmental scientists are increasingly using enormous artificial intelligence models to make predictions about changes in weather and climate, but a new study by MIT researchers shows that bigger models are not always better.

The team demonstrates that, in certain climate scenarios, much simpler, physics-based models can generate more accurate predictions than state-of-the-art deep-learning models.

Their analysis also reveals that a benchmarking technique commonly used to evaluate machine-learning methods for climate prediction can be distorted by natural variations in the data, such as fluctuations in weather patterns. This could lead someone to believe a deep-learning model makes more accurate predictions when that is not the case.

The researchers developed a more robust way of evaluating these techniques, which shows that, while simple models are more accurate when estimating regional surface temperatures, deep-learning approaches can be the best choice for estimating local rainfall.

They used these results to enhance a simulation tool known as a climate emulator, which can rapidly simulate the effect of human activities on a future climate.

The researchers see their work as a “cautionary tale” about the risk of deploying large AI models for climate science. While deep-learning models have shown incredible success in domains such as natural language, climate science contains a proven set of physical laws and approximations, and the challenge becomes how to incorporate those into AI models.

“We are trying to develop models that are going to be useful and relevant for the kinds of things that decision-makers need going forward when making climate policy choices. While it might be attractive to use the latest, big-picture machine-learning model on a climate problem, what this study shows is that stepping back and really thinking about the problem fundamentals is important and useful,” says study senior author Noelle Selin, a professor in the MIT Institute for Data, Systems, and Society (IDSS) and the Department of Earth, Atmospheric and Planetary Sciences (EAPS).

Selin’s co-authors are lead author Björn Lütjens, a former EAPS postdoc who is now a research scientist at IBM Research; senior author Raffaele Ferrari, the Cecil and Ida Green Professor of Oceanography in EAPS and director of the MIT Program in Atmospheres, Oceans, and Climate; and Duncan Watson-Parris, assistant professor at the University of California at San Diego. Selin and Ferrari are also co-principal investigators of the Bringing Computation to the Climate Challenge project, out of which this research emerged. The paper appears today in the Journal of Advances in Modeling Earth Systems.

Comparing emulators

Because the Earth’s climate is so complex, running a state-of-the-art climate model to predict how pollution levels will impact environmental factors like temperature can take weeks on the world’s most powerful supercomputers.

Scientists often create climate emulators, simpler approximations of a state-of-the-art climate model, which are faster and more accessible. A policymaker could use a climate emulator to see how alternative assumptions about greenhouse gas emissions would affect future temperatures, helping them develop regulations.

But an emulator isn’t very useful if it makes inaccurate predictions about the local impacts of climate change. While deep learning has become increasingly popular for emulation, few studies have explored whether these models perform better than tried-and-true approaches.

The MIT researchers performed such a study. They compared a traditional technique called linear pattern scaling (LPS) with a deep-learning model using a common benchmark dataset for evaluating climate emulators.
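The article does not spell out how LPS works. As it is commonly described, pattern scaling fits a straight line at each grid cell that relates a local quantity to the change in global mean temperature. The sketch below illustrates that idea with NumPy on synthetic data. The function names `fit_lps` and `predict_lps`, the grid size, and the noise level are hypothetical choices for illustration and are not taken from the study.

```python
import numpy as np

def fit_lps(global_mean_temp, local_field):
    """Fit one least-squares line per grid cell (illustrative sketch).

    global_mean_temp: array of shape (n_years,)
    local_field:      array of shape (n_years, n_lat, n_lon)
    Returns (slope, intercept), each of shape (n_lat, n_lon).
    """
    n_years = global_mean_temp.shape[0]
    # Design matrix with a slope column and a constant column.
    X = np.column_stack([global_mean_temp, np.ones(n_years)])
    # Flatten the grid so every cell becomes one regression target.
    Y = local_field.reshape(n_years, -1)
    coef, *_ = np.linalg.lstsq(X, Y, rcond=None)
    grid_shape = local_field.shape[1:]
    return coef[0].reshape(grid_shape), coef[1].reshape(grid_shape)

def predict_lps(slope, intercept, global_mean_temp):
    """Scale the fitted spatial pattern by new global mean temperatures."""
    gmt = np.asarray(global_mean_temp)[:, None, None]
    return slope[None] * gmt + intercept[None]

# Synthetic data (assumed, not from the study): each cell warms linearly
# with global mean temperature, plus random year-to-year noise.
rng = np.random.default_rng(0)
years = 50
gmt = np.linspace(0.0, 3.0, years)
true_slope = rng.uniform(0.5, 2.0, size=(4, 8))
local = true_slope[None] * gmt[:, None, None] \
    + rng.normal(0.0, 0.1, size=(years, 4, 8))

slope, intercept = fit_lps(gmt, local)
pred = predict_lps(slope, intercept, np.array([4.0]))
```

Because the fit is a separate linear regression in every cell, it is cheap to compute and easy to inspect, which is part of why such a baseline is worth running before a larger model.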

Their results showed that LPS outperformed deep-learning models at predicting nearly all of the parameters they tested, including temperature and precipitation.

“Large AI methods are very appealing to scientists, but they rarely solve a completely new problem, so implementing an existing solution first is necessary to find out whether the complex machine-learning approach actually improves upon it,” says Lütjens.

Some initial results seemed to fly in the face of the researchers’ domain knowledge. The powerful deep-learning model should have been more accurate when making predictions about precipitation, since those data don’t follow a linear pattern.

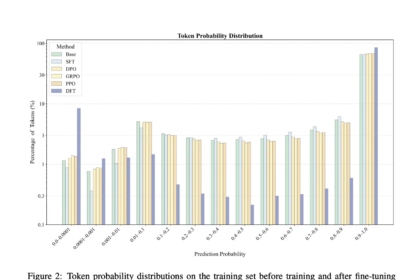

They found that the large amount of natural variability in climate model runs can cause the deep-learning model to perform poorly on unpredictable long-term oscillations, like El Niño/La Niña. This skews the benchmarking scores in favor of LPS, which averages out those oscillations.

Constructing a new evaluation

From there, the researchers constructed a new evaluation with more data that address natural climate variability. With this new evaluation, the deep-learning model performed slightly better than LPS for local precipitation, but LPS was still more accurate for temperature predictions.
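A toy experiment can show why the amount of data behind a benchmark might change which emulator looks better. The sketch below is not the study's protocol. It assumes a made-up nonlinear forced signal, Gaussian noise standing in for natural variability, a straight-line fit as the "simple" emulator, and a stand-in "flexible" emulator that simply reproduces its noisy training target. Every name and number in it is hypothetical.

```python
import numpy as np

def rmse(a, b):
    """Root-mean-square error between two arrays."""
    return float(np.sqrt(np.mean((a - b) ** 2)))

def compare(n_members, n_steps=2000, noise_sd=1.0, seed=0):
    """Score two toy emulators against an ensemble-mean target.

    Returns (rmse_simple, rmse_flexible).
    """
    rng = np.random.default_rng(seed)
    t = np.linspace(0.0, 1.0, n_steps)
    forced = 4.0 * t**2  # hypothetical nonlinear forced response

    def ensemble_mean():
        # Each member is the forced signal plus independent noise that
        # stands in for natural variability; averaging members damps it.
        noise = rng.normal(0.0, noise_sd, size=(n_members, n_steps))
        return (forced + noise).mean(axis=0)

    train_target = ensemble_mean()
    test_target = ensemble_mean()

    # "Simple" emulator: a straight line, so it smooths over the noise
    # but cannot follow the curvature of the forced signal.
    slope, intercept = np.polyfit(t, train_target, deg=1)
    simple_pred = slope * t + intercept

    # "Flexible" emulator (stand-in): reproduces the training target,
    # so it captures the curvature but also inherits the training noise.
    flexible_pred = train_target

    return rmse(simple_pred, test_target), rmse(flexible_pred, test_target)

simple_few, flexible_few = compare(n_members=3)
simple_many, flexible_many = compare(n_members=50)
```

With these assumed settings, the straight line scores better when only three members are averaged, and the flexible stand-in scores better when fifty are averaged. The ranking flips because averaging more members shrinks the noise in both the training and test targets.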

“It is important to use the modeling tool that is right for the problem, but in order to do that you also have to set up the problem the right way in the first place,” Selin says.

Based on these results, the researchers incorporated LPS into a climate emulation platform to predict local temperature changes in different emission scenarios.

“We are not advocating that LPS should always be the goal. It still has limitations. For instance, LPS doesn’t predict variability or extreme weather events,” Ferrari adds.

Rather, they hope their results emphasize the need to develop better benchmarking techniques, which could provide a fuller picture of which climate emulation technique is best suited for a particular situation.

“With an improved climate emulation benchmark, we could use more complex machine-learning methods to explore problems that are currently very hard to address, like the impacts of aerosols or estimations of extreme precipitation,” Lütjens says.

Ultimately, more accurate benchmarking techniques will help ensure policymakers are making decisions based on the best available information.

The researchers hope others build on their analysis, perhaps by studying additional improvements to climate emulation methods and benchmarks. Such research could explore impact-oriented metrics like drought indicators and wildfire risks, or new variables like regional wind speeds.

This research is funded, in part, by Schmidt Sciences, LLC, and is part of the MIT Climate Grand Challenges team for “Bringing Computation to the Climate Challenge.”